AI Data Security Solutions: Reference Architecture Guide

Learn how to design AI data security solutions for SaaS, cloud, and LLM workflows using proven reference architectures and controls.

AI data security solutions are best understood not as a single tool, but as an architectural response to how data now moves inside modern organizations. Unlike traditional applications, AI systems continuously ingest, transform, and emit data across SaaS platforms, cloud infrastructure, APIs, and large language models. Every prompt, embedding, response, and downstream action becomes a potential data exposure point. In this environment, security failures rarely happen at rest; they happen in motion.

Traditional security architectures struggle here because they were designed for static boundaries and predictable data paths. Network controls assume north–south traffic; endpoint tools focus on devices; legacy DLP assumes files and emails. AI breaks all of those assumptions. Sensitive data flows east–west between SaaS apps, is copied into LLM prompts, enriched by cloud services, and replayed into tickets, chats, and logs. Policies may exist, but enforcement often happens too late or not at all.

This is where a reference architecture for ai data security solutions becomes essential. A reference architecture bridges the gap between governance intent and technical enforcement by defining how identity, data discovery, classification, policy, and real-time controls work together across AI workflows. Rather than debating products or vendors, this guide focuses on how to design and deploy data security for AI systems in a way that scales across SaaS, cloud, and LLM-driven workloads.

AI data security solutions, when viewed architecturally, are not products or platforms; they are a coordinated system of controls designed to protect sensitive data as it moves through AI-driven workflows. This distinction matters because AI introduces continuous, non-linear data movement across SaaS applications, cloud services, APIs, and LLMs. In this context, ai data security solutions exist to translate governance intent into technical enforcement across the full lifecycle of AI data usage.

From an architecture perspective, these solutions focus on protecting data before it is exposed to AI systems, while it is actively processed by models, and after outputs are generated and reused downstream. The emphasis is not on detection after the fact, but on enforcing data security for AI at runtime, where risk actually materializes.

Key architectural characteristics of AI data security solutions include:

A coordinated control plane, not a single control: AI data security solutions combine identity context, data discovery, classification, policy logic, and enforcement into a unified flow. Each control is ineffective in isolation; together, they form an end-to-end system that follows data across AI workflows.

Coverage before, during, and after AI usage: Sensitive data must be governed before it enters prompts, during inference and model interaction, and after outputs are generated and propagated into SaaS tools, tickets, logs, or storage. Architecture must account for all three phases, not just input filtering.

Runtime policy enforcement instead of post-incident response: Traditional approaches rely on alerts and remediation after exposure has occurred. AI data security solutions enforce policy inline; blocking, redacting, or transforming sensitive data as it moves through AI systems, rather than reacting once the data is already leaked.

Alignment with how AI systems actually process data: Controls are mapped to real data paths such as prompt injection, API calls, embeddings, and response reuse, rather than abstract file or network boundaries. This is foundational for enterprise AI data security in production environments.

Architecturally, the defining trait of AI data security solutions is that they treat AI as a live data-processing pipeline, not a static application. By focusing on coordinated controls and runtime enforcement, organizations can apply ai data security measures that scale with AI adoption instead of breaking under it.

AI workflows change how data moves, which means AI data security needs a different architecture, not small upgrades to legacy tools. Traditional security assumes predictable systems, structured data, and clear boundaries. AI breaks all three, creating new blind spots if old controls are reused.

Why AI requires a new security model:

What this means in practice:

Bottom line:

AI workflows are continuous, distributed, and unpredictable. Effective AI data security must move from perimeter-based inspection to inline, data-centric controls that follow data across prompts, APIs, inference, and downstream reuse. Without this shift, more tools do not equal better protection.

Effective ai data security solutions are built as layered architectures rather than isolated controls. Each layer addresses a distinct class of risk, but none is sufficient on its own. The reference architecture works because these layers are tightly coordinated; identity informs policy, data classification drives enforcement, and monitoring closes the loop with governance. This layered approach reflects how data actually flows through AI-enabled enterprises.

The identity and access layer establishes who and what can interact with AI systems, forming the foundation for all downstream data security for AI controls. In AI environments, identities extend well beyond human users, which makes this layer both broader and more complex than traditional IAM.

Key elements of this layer include:

Human and non-human identities

Users, service accounts, background jobs, and AI agents all generate prompts and consume outputs; each must be explicitly modeled and governed.

API keys, service accounts, and AI agents

Machine identities often have persistent, high-volume access to AI systems; unmanaged keys become silent risk multipliers.

Least-privilege access to AI systems

Access should be scoped by data sensitivity, model capability, and workflow purpose, not broad convenience.

By anchoring AI access in identity context, organizations ensure that later ai data security measures can evaluate not just what data is moving, but who or what is moving it.

AI cannot be secured if organizations do not know what data exists or where it lives. The data discovery and classification layer provides visibility across SaaS applications, cloud infrastructure, and underlying data stores that AI systems can access.

This layer focuses on:

Discovering sensitive data across SaaS, cloud, and data stores

AI workflows often pull from CRM systems, ticketing tools, file storage, and databases simultaneously.

Classifying structured and unstructured data

Free text, documents, images, and attachments must be analyzed alongside tables and records to support enterprise AI data security.

Identifying AI-accessible data sources

Not all sensitive data is equally exposed; the architecture must surface which repositories are reachable by AI tools and automations.

Accurate discovery and classification transform AI security from guesswork into enforceable policy, enabling controls to follow data rather than infrastructure.

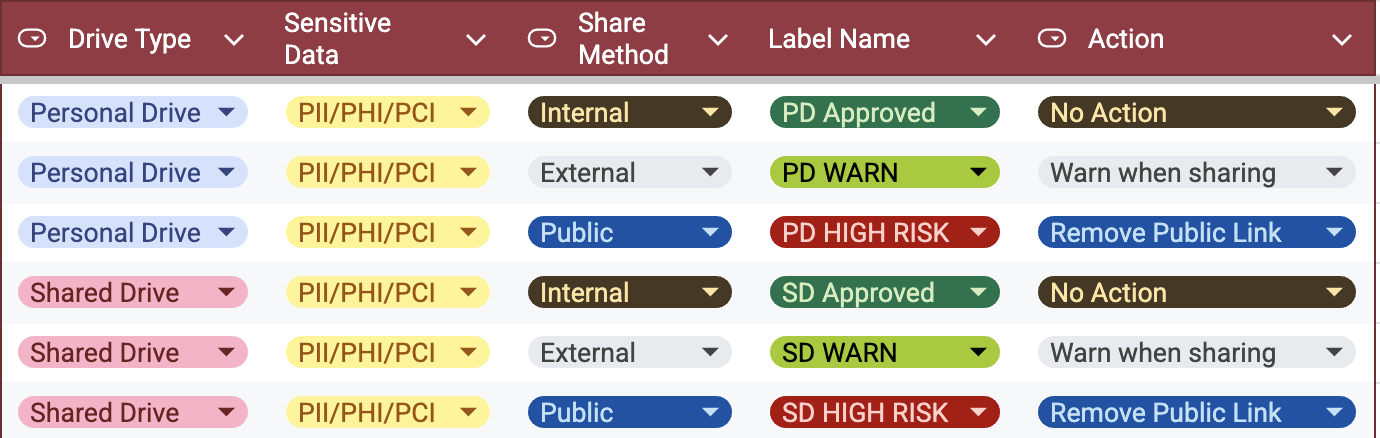

The policy and governance layer translates organizational intent into enforceable rules that guide AI behavior. This is where ai data security solutions move from visibility to control, defining what is allowed under which conditions.

Core capabilities include:

Context-aware policies

Decisions incorporate data type, identity, destination, and workflow context instead of static allow or deny lists.

AI-specific rules

Policies govern prompt inputs, model interactions, and output handling, accounting for the unique risks of inference and generation.

Compliance mapping

Governance rules align with regulatory requirements such as GDPR, HIPAA, and PCI without relying on manual interpretation.

This layer ensures that AI usage aligns with both business objectives and regulatory obligations, creating consistency across diverse AI workflows.

The real-time enforcement layer is where architecture proves its value. Unlike post-incident controls, this layer actively protects data as AI workflows execute, enforcing policy at the moment risk appears.

Key functions of this layer include:

Inline inspection of prompts and responses: Prompts and model outputs are evaluated in real time, before sensitive data is transmitted or persisted.

Redaction, masking, or blocking before data leaves: Controls act preventively, reducing exposure rather than alerting after leakage.

Enforcement inside SaaS and AI workflows: Protection follows data across chats, tickets, documents, APIs, and LLM interactions.

By enforcing policy inline, organizations achieve data security for AI that scales with usage instead of lagging behind it.

The final layer closes the feedback loop by providing continuous oversight and operational response. AI systems evolve rapidly; monitoring ensures that security posture evolves with them.

This layer delivers:

Continuous monitoring of AI data usage

Organizations gain visibility into how data flows through AI systems over time, not just at deployment.

Audit logs for compliance and accountability

Detailed records support regulatory audits and internal reviews without reconstructing events manually.

Incident response workflows for AI exposure

When policy violations occur, teams can investigate, contain, and remediate quickly.

Together, these capabilities ensure that ai data security measures remain effective as AI adoption grows and workflows change.

When implemented as a cohesive system, these five layers form a practical reference architecture for enterprise AI data security. The strength of the model lies not in any single layer, but in how identity, data, policy, enforcement, and monitoring reinforce one another across SaaS, cloud, and LLM workflows.

SaaS applications are where most AI data leakage begins, not because they are insecure by default, but because they sit at the intersection of people, automation, and Most AI data leakage starts in SaaS applications like Slack, Zendesk, Salesforce, and Google Workspace. These tools sit where people, automation, and AI meet, and they are directly connected to AI features such as copilots, summarization, and drafting.

Why SaaS is the biggest AI risk surface:

Why endpoint-only DLP fails:

What works instead:

Bottom line:

AI data security for SaaS must live inside SaaS workflows, not on endpoints or networks, to stop sensitive data exposure in real time.

AI Data Security for Cloud and Data Infrastructure

Cloud infrastructure is the backbone of enterprise AI. Data lakes, warehouses, and object storage feed AI models during training and inference, which means AI data security must extend into the cloud, not stop at SaaS.

Why cloud infrastructure creates AI risk:

What effective AI data security in the cloud requires:

Why this is different from traditional cloud security:

👉 Read our blog on Cloud DLP Solutions

LLM and GenAI workflows introduce a class of risk that does not exist in traditional applications; data is exchanged dynamically through prompts and responses, often without persistent structure or predictable behavior. As a result, ai data security solutions for LLMs must focus on runtime control rather than static configuration or retrospective analysis. This is where architectural rigor matters most.

At the core of LLM security is prompt inspection. Prompts are the primary interface between humans, systems, and models, and they frequently contain sensitive information such as PII, PHI, credentials, or proprietary data. Because prompts are ephemeral and generated in real time, they bypass file-based and perimeter-based controls. Effective data security for AI requires inspecting prompt content inline, before it reaches the model.

Equally important is output validation. LLM responses may include sensitive data copied from prompts, inferred from context, or reconstructed from training signals. Since outputs are non-deterministic, security cannot assume that safe inputs always produce safe results. AI data security solutions must therefore evaluate outputs in real time, applying the same rigor as input inspection.

Context-aware redaction connects inputs and outputs to policy. Rather than blanket blocking, enforcement decisions consider who initiated the request, which model is used, what data types are involved, and where the output will be sent. This allows organizations to apply precise ai data security measures that protect sensitive data while preserving legitimate AI usage.

Crucially, these controls must be model-agnostic. Security architecture cannot be tightly coupled to a single LLM or provider; enterprises routinely use multiple models across vendors and deployment types. Model-agnostic enforcement ensures consistent governance regardless of where inference occurs.

This is why AI usage monitoring alone is insufficient. Monitoring can show that sensitive data was used after the fact, but it does not prevent exposure. In AI workflows, damage occurs at the moment data is sent or generated. Runtime enforcement is mandatory because prevention must happen inline, not during a post-incident review.

Compliance pressure is often the catalyst for AI security initiatives, but compliance should never be mistaken for architecture. AI data security solutions enable compliance outcomes by enforcing controls consistently across AI workflows; they do not replace regulatory frameworks themselves.

For GDPR, architecture supports data minimization and purpose limitation by ensuring that only necessary data types are included in AI prompts and that outputs do not propagate personal data beyond approved use cases. Runtime inspection and redaction reduce unnecessary exposure while preserving functional AI workflows.

For HIPAA, AI data security measures focus on PHI handling inside AI workflows. This includes inspecting prompts that originate from clinical notes, support tickets, or internal tools, enforcing strict access controls, and ensuring that outputs containing PHI are appropriately governed before entering downstream systems.

For PCI DSS, controls address cardholder data appearing in AI prompts or outputs. Inline detection and redaction prevent payment data from being processed by AI systems that are not designed or approved for such use, reducing scope and audit burden.

Across all three, the key point is architectural consistency. When identity, data classification, policy, and enforcement are unified, compliance becomes an outcome of correct system design rather than a manual overlay. Enterprise AI data security succeeds when regulatory alignment is embedded into how AI workflows operate by default.

To make the reference architecture concrete, it helps to walk through an end-to-end AI data flow as if explaining a diagram in words. This sequence illustrates how ai data security solutions operate in practice across SaaS, AI, and downstream systems.

This step-by-step flow demonstrates why AI security must be architectural. Each stage reinforces the next, ensuring that ai data security measures protect data throughout its lifecycle rather than at a single checkpoint. When implemented cohesively, the reference architecture turns AI from a compliance risk into a governed, scalable capability.

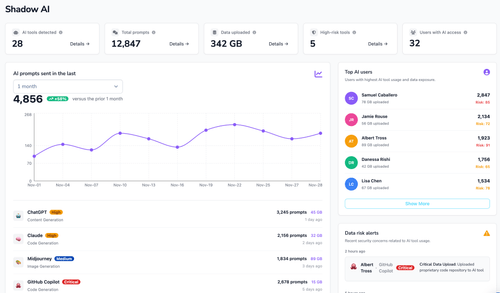

Within an AI data security reference architecture, Strac occupies a clear architectural role rather than a category-defining position. Its function is not to replace governance, identity, or compliance strategy; it is to operationalize those decisions through real-time enforcement, discovery, and remediation across the systems where AI data actually flows. This distinction is critical to understanding how ai data security solutions work in practice.

At an architectural level, Strac primarily strengthens the real-time enforcement layer, while tightly integrating with data discovery and classification signals upstream. This placement allows organizations to move from policy definition to runtime control without redesigning their entire security stack.

From an enforcement perspective, Strac operates inline within SaaS applications, cloud services, and LLM workflows. Prompts, messages, files, tickets, and AI-generated outputs can be inspected as they move, enabling redaction, masking, or blocking before sensitive data leaves its point of origin. This aligns directly with the requirement that data security for AI be preventive rather than reactive.

Strac also plays a critical role in discovery and remediation. By continuously discovering sensitive data across SaaS tools, cloud storage, and data services, it provides the contextual signals needed for enforcement decisions. Classification results are not static reports; they actively inform how AI interactions are governed at runtime. When violations occur, remediation actions are applied automatically, reducing reliance on manual intervention or delayed response.

Coverage across SaaS, cloud, and LLM workflows is essential in modern AI architectures because data rarely stays in one layer. Strac’s integrations allow enforcement logic to follow data as it moves from collaboration tools into AI prompts, from cloud repositories into inference pipelines, and from model outputs back into enterprise systems. This continuity is what enables enterprise AI data security at scale.

A defining architectural advantage is Strac’s agentless integration model. Rather than deploying endpoint agents or custom code, controls are applied through direct integrations and APIs. This reduces friction for platform teams, shortens deployment timelines, and minimizes operational overhead, all while maintaining consistent enforcement. In architectural terms, agentless design lowers the cost of extending ai data security measures across new AI use cases as they emerge.

Viewed through the reference architecture lens, Strac functions as the connective tissue between governance intent and real-world AI execution. By anchoring enforcement where data moves; across SaaS, cloud, and LLM workflows; it enables organizations to implement AI data security as a living system rather than a static set of policies.

AI data security cannot be solved by adding another tool to an existing stack; it must be designed as an architecture that reflects how AI systems actually process and move data. As AI adoption accelerates across enterprises, ai data security solutions must evolve from static controls into coordinated systems that operate across SaaS platforms, cloud infrastructure, and LLM workflows. Security decisions made in isolation will consistently fail in environments where data is fluid, unstructured, and continuously reused.

Prevention must happen inline. Monitoring, alerting, and post-incident remediation provide visibility, but they do not stop exposure at the moment it occurs. In AI workflows, sensitive data is at risk the instant it enters a prompt or is generated in a response. Data security for AI therefore depends on real-time inspection, policy evaluation, and enforcement embedded directly into the flow of prompts, APIs, and outputs.

SaaS applications and LLMs redefine traditional data perimeters. Collaboration tools, ticketing systems, file platforms, and AI services now function as shared execution environments where data moves laterally and automatically. Endpoint and network-centric assumptions no longer hold. Enterprise AI data security must follow data wherever it travels, not where security teams expect it to stay.

Finally, effective AI data security solutions coordinate discovery, policy, and enforcement as a single system. Discovery without enforcement creates awareness but not protection. Policy without context creates friction or false confidence. Enforcement without classification creates blind risk. When these elements are architected together, organizations can apply ai data security measures that scale with AI innovation while maintaining control, compliance, and trust.

An AI data security solution is not a standalone product category; it is an architectural set of coordinated controls that protect sensitive data before, during, and after AI usage. In practice, ai data security solutions combine identity context, data discovery and classification, policy governance, and inline enforcement so sensitive information does not leak through SaaS workflows, cloud pipelines, or LLM prompt and response flows. The point is prevention at runtime, not detection after exposure.

AI data security solutions work by inserting control points directly into the data paths that AI workflows use every day. Instead of treating AI as a separate system, the architecture follows data from SaaS to APIs to models and back into enterprise tools, applying data security for AI continuously.

When these steps run as a single system, the organization gets consistent protection without relying on delayed alerts or manual clean-up.

AI workflows require layered controls because risk emerges across identity, data, policy, and runtime execution. Enterprise AI data security fails when organizations deploy one layer and assume it covers the whole AI lifecycle.

This layered model is what turns governance from intent into enforceable behavior.

LLM prompts are where sensitive data most commonly crosses into AI systems, so ai data security measures must protect prompts in real time. The architecture inspects prompt content as it is generated, applies context-aware policies, and prevents restricted data from being transmitted to the model in the first place.

A strong control path typically includes inline prompt inspection, classification-driven decisions, and context-aware redaction or blocking based on user identity, data type, model destination, and workflow purpose. Output validation matters too, because even “safe” prompts can produce unsafe responses in non-deterministic systems. The result is data security for AI that prevents leakage instead of documenting it.

Yes, but compliance should be treated as an outcome of architecture, not the architecture itself. AI data security solutions support compliance by enforcing consistent controls on how regulated data is used in AI workflows, and by producing audit evidence that proves those controls are operating.

When identity, discovery, policy, enforcement, and logging are coordinated, compliance becomes a byproduct of correct AI workflow design rather than a manual retrofit.

.avif)

.avif)

.avif)

.avif)

.avif)

.gif)