How AI is Reshaping Access Control

Discover how AI is reshaping access control, why legacy approaches fail, and how modern platforms like Strac handle AI access control.

AI access control has become one of the most urgent security challenges organizations face. As companies deploy generative AI across SaaS tools, internal workflows, and customer-facing applications, traditional access control models are breaking down.

Static role-based permissions and identity-only controls were never designed to govern real-time AI data flows. Prompts, uploads, generated outputs, and API calls now create entirely new exposure paths that bypass legacy controls.

This guide explains what AI access control really means today, why legacy approaches fail, and how modern platforms like Strac approach AI access control through enforceable AI data governance, DSPM, and real-time DLP.

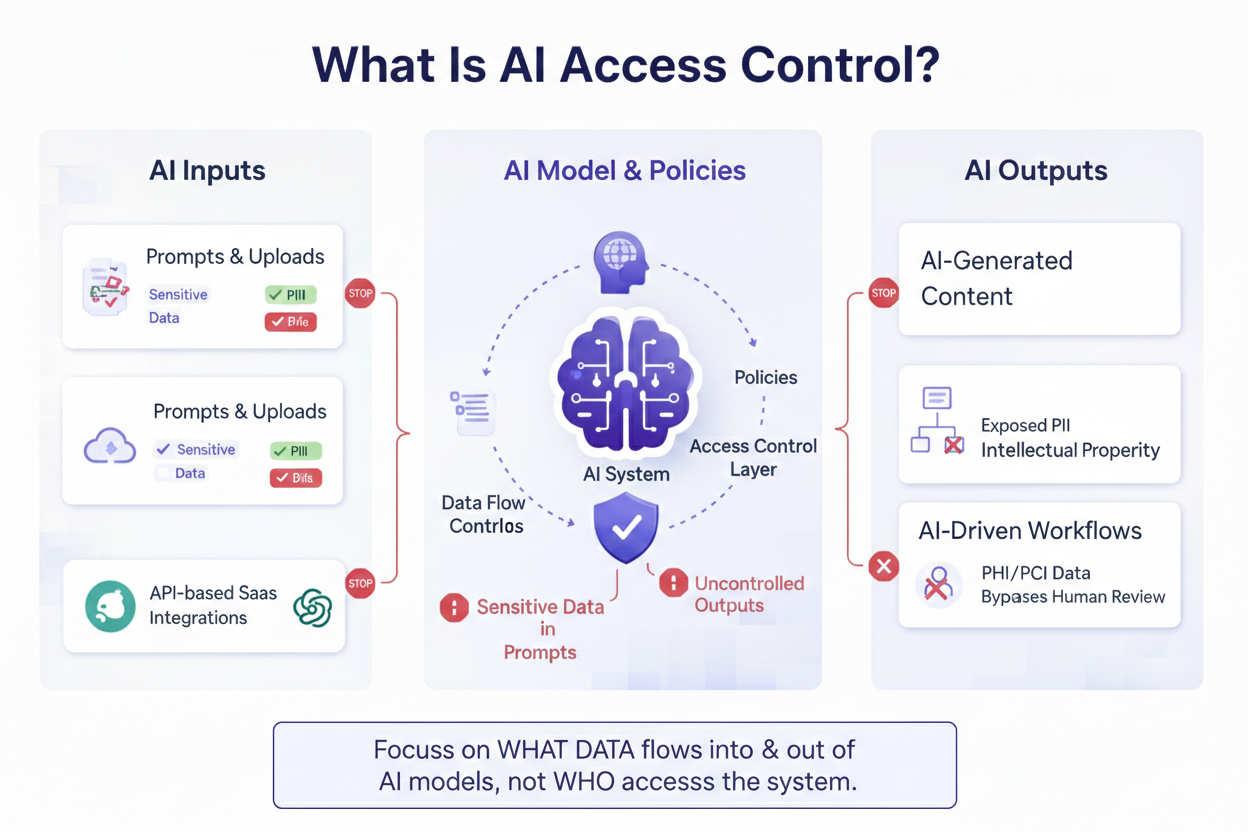

AI access control refers to the policies and technical controls that determine what data AI systems can access, process, and generate, and under what conditions. Unlike traditional access control, which focuses on who can access a system, AI access control focuses on what data is allowed to flow into and out of AI models.

In practice, this means controlling:

Without AI-native controls, organizations risk leaking regulated or proprietary data even when identity and authentication are technically “correct.”

Traditional access control systems were built for static environments; AI operates dynamically. This mismatch creates dangerous blind spots.

IAM and RBAC determine whether a user can access ChatGPT, Copilot, or an internal AI tool; they do not inspect what the user sends or what the model returns. A fully authorized employee can still leak customer data through a prompt in seconds.
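The gap can be illustrated with a minimal Python sketch. The role table, tool name, and SSN-style pattern below are hypothetical and exist only to show that an identity check and a content check answer different questions; this is not any vendor's implementation.

```python
import re

# Hypothetical role table: which roles may open which AI tool.
ROLE_PERMISSIONS = {"support_agent": {"chat_assistant"}}

# Illustrative detector only; production detectors need validation
# and far broader coverage than a single regex.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def rbac_allows(role: str, tool: str) -> bool:
    """Identity-level check: may this role use this tool at all?"""
    return tool in ROLE_PERMISSIONS.get(role, set())


def prompt_contains_sensitive_data(prompt: str) -> bool:
    """Content-level check: does the prompt carry an SSN-like value?"""
    return SSN_PATTERN.search(prompt) is not None


prompt = "Summarize the complaint from the customer with SSN 123-45-6789."
authorized = rbac_allows("support_agent", "chat_assistant")
sensitive = prompt_contains_sensitive_data(prompt)
```

Here `authorized` and `sensitive` are both true: the RBAC check passes, and only the content check notices that the prompt carries regulated data.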

CASB and legacy DLP tools often sit at email, network, or endpoint layers. AI workflows live inside SaaS applications and APIs, where data moves in real time and never touches traditional inspection points.

AI data exposure occurs during:

Access control that only evaluates permissions at login time is irrelevant to these risks.

Effective AI access control is impossible without AI data governance. Governance defines what data is sensitive, where it exists, and how it should be handled; access control enforces those rules in real time.
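One way to picture the split between governance and enforcement is a policy table (governance) consulted by a decision function (enforcement). The sketch below is an assumption-laden illustration: the data classes, the action names, and the fail-closed default for unknown classes are all hypothetical choices, not a description of a specific product.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REDACT = "redact"
    BLOCK = "block"


# Governance: hypothetical mapping from data class to handling rule.
POLICY = {
    "public": Action.ALLOW,
    "pii": Action.REDACT,
    "phi": Action.BLOCK,
}

# Ordering used to pick the strictest action when several classes appear.
SEVERITY = [Action.ALLOW, Action.REDACT, Action.BLOCK]


def decide(data_classes: set[str]) -> Action:
    """Enforcement: return the strictest action any detected class requires.

    Unknown classes fall back to BLOCK (an assumed fail-closed default).
    """
    actions = [POLICY.get(name, Action.BLOCK) for name in data_classes]
    if not actions:
        return Action.ALLOW
    return max(actions, key=SEVERITY.index)
```

Keeping the policy as plain data means the rules can change without touching the enforcement path, which is the separation the paragraph above describes.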

AI data governance includes:

Strac’s AI data governance approach treats AI as a first-class data surface, not an exception layered onto legacy DLP.

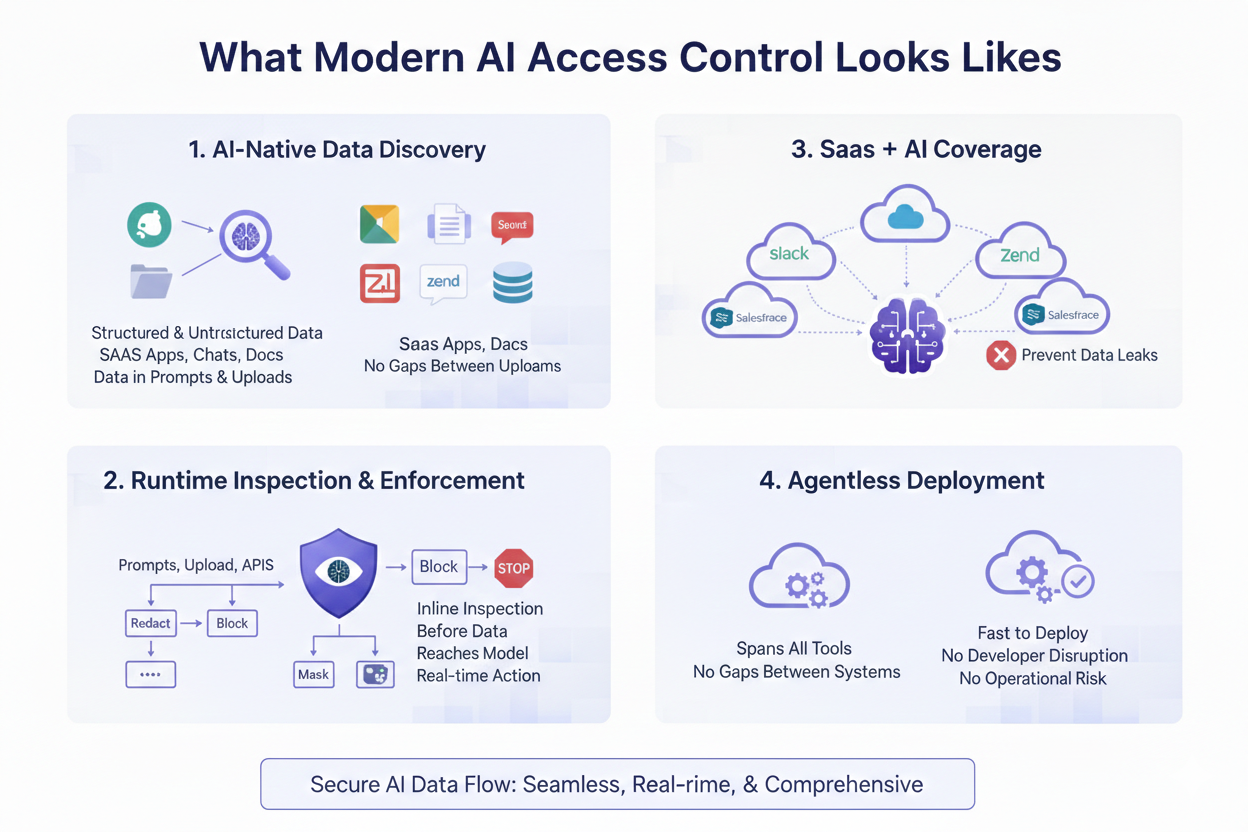

AI access control starts with knowing what data could enter an AI system. This includes structured and unstructured data across SaaS apps, tickets, chats, documents, and attachments. Discovery must account for data likely to be pasted into prompts, not just stored in databases.
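As a rough sketch of what discovery produces, the Python below classifies a few text records and builds an inventory keyed by source and data class. The source names, sample records, and the two regex detectors are hypothetical; real discovery would read from SaaS APIs and use validated detectors.

```python
import re
from collections import Counter

# Illustrative detectors only (assumptions, not production-grade patterns).
DETECTORS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify(text: str) -> set[str]:
    """Return the names of all detectors that match the text."""
    return {name for name, pattern in DETECTORS.items() if pattern.search(text)}


def inventory(records) -> Counter:
    """Count matching records per (source, data class) pair."""
    counts = Counter()
    for source, text in records:
        for label in classify(text):
            counts[(source, label)] += 1
    return counts


# Hypothetical records standing in for content pulled from SaaS apps.
records = [
    ("zendesk_ticket", "Customer jane@example.com cannot log in."),
    ("slack_message", "Her SSN is 123-45-6789, can someone verify?"),
    ("google_doc", "Q3 roadmap draft."),
]
found = inventory(records)
```

The resulting counts show which sources hold content that would be risky to paste into a prompt, which is the visibility that later enforcement depends on.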

Policies must be enforced before data reaches the model, not after an alert is generated. This requires inline inspection of prompts, uploads, and API calls with the ability to redact, block, or mask sensitive content in real time.
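A minimal sketch of inline enforcement, assuming a hypothetical `guarded_call` wrapper that sits between the caller and the model. The patterns, the choice to block on SSNs while redacting emails, and the `echo_model` stand-in are illustrative assumptions; the point is only that inspection happens before the model sees the prompt.

```python
import re
from typing import Callable

# Illustrative patterns only; real deployments need validated detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> tuple[str, set[str]]:
    """Replace each match with a placeholder and report which labels fired."""
    found = set()
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}_REDACTED]", prompt)
        if count:
            found.add(label)
    return prompt, found


def guarded_call(
    prompt: str,
    model: Callable[[str], str],
    block_on: frozenset = frozenset({"SSN"}),
) -> str:
    """Inspect the prompt before the model sees it.

    Labels in `block_on` stop the request; other matches are redacted.
    """
    clean, found = redact(prompt)
    blocked = found & block_on
    if blocked:
        raise PermissionError(f"prompt blocked, contains: {sorted(blocked)}")
    return model(clean)


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call (hypothetical)."""
    return f"model saw: {prompt}"
```

Calling `guarded_call("Email jane@example.com about the refund.", echo_model)` forwards the prompt with the address replaced by `[EMAIL_REDACTED]`, while a prompt containing an SSN-style value raises `PermissionError` and never reaches the model.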

AI does not operate in isolation. Effective access control spans Slack, Google Workspace, Salesforce, Zendesk, internal tools, and AI integrations simultaneously. Gaps between tools are where data leaks occur.

AI access control must be fast to deploy and easy to scale. Agent-heavy approaches slow adoption and introduce operational risk. Agentless architectures allow security teams to enforce controls without disrupting developers or employees.

Strac continuously discovers and classifies sensitive data across SaaS applications, cloud storage, and AI-related workflows. This creates the visibility layer required for meaningful access control, without deploying endpoint agents or custom code.

Unlike alert-only tools, Strac enforces policies inline. When sensitive data appears in an AI prompt, upload, or response, Strac can redact, mask, block, or quarantine content automatically, rather than hours later in a report.
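The four remediation actions differ in what the recipient ends up seeing. The sketch below is a hypothetical illustration of those semantics for a card-number pattern, not Strac's implementation: redaction replaces the value, masking keeps the last four digits, blocking delivers nothing, and quarantine delivers nothing but holds the original for review.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Illustrative 16-digit card pattern (assumption; no Luhn validation).
CARD = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def redact(text: str) -> str:
    """Replace the whole value with a placeholder."""
    return CARD.sub("[CARD_REDACTED]", text)


def mask(text: str) -> str:
    """Hide all but the last four digits of each match."""
    def _mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]
    return CARD.sub(_mask, text)


@dataclass
class Outcome:
    delivered: Optional[str]                 # what the recipient sees, if anything
    quarantined: List[str] = field(default_factory=list)  # held for review


def remediate(text: str, action: str) -> Outcome:
    """Apply one remediation action; clean text passes through untouched."""
    if not CARD.search(text):
        return Outcome(delivered=text)
    if action == "redact":
        return Outcome(delivered=redact(text))
    if action == "mask":
        return Outcome(delivered=mask(text))
    if action == "block":
        return Outcome(delivered=None)
    if action == "quarantine":
        return Outcome(delivered=None, quarantined=[text])
    raise ValueError(f"unknown action: {action}")
```

Masking is useful when a support agent still needs to confirm which card is being discussed; quarantine suits cases where a reviewer must decide whether the content should be released.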

AI access control fails if enforcement is delayed. Strac’s AI DLP inspects data flows at runtime, preventing sensitive information from ever reaching external or internal AI models when policy conditions are violated.

Strac applies the same access control logic across Slack, email, CRM, support tools, cloud storage, and AI integrations. This eliminates the blind spots created by fragmented security tools and inconsistent policies.

AI access control is increasingly scrutinized by regulators. Frameworks like GDPR, HIPAA, PCI DSS, and emerging AI regulations require demonstrable controls over how data is processed by automated systems.

Strac supports compliance by:

This shifts AI governance from documentation to provable control.
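One common way to make a control demonstrable is to record every enforcement decision as a structured audit event. The sketch below is an assumption about what such a record might contain; the field names are hypothetical, and the content is stored only as a SHA-256 digest so the log itself does not become another copy of the sensitive data.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_event(user: str, destination: str, data_classes: set,
                action: str, content: str) -> dict:
    """Build an audit record for one enforcement decision.

    Only a hash of the content is kept, never the content itself.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "destination": destination,
        "data_classes": sorted(data_classes),
        "action": action,
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }


# Hypothetical decision being logged.
event = audit_event(
    user="agent-42",
    destination="external_llm",
    data_classes={"ssn", "pii"},
    action="block",
    content="Verify SSN 123-45-6789 please.",
)
line = json.dumps(event, sort_keys=True)  # one JSON line per decision
```

A log of such lines lets an auditor see which policy fired, for whom, and with what outcome, without exposing the underlying data.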

AI access control is no longer optional, and it cannot be solved with legacy IAM or DLP alone. As AI becomes embedded across SaaS workflows, organizations must control data, not just users.

The winning approach combines AI data governance, DSPM, and real-time enforcement. Platforms like Strac demonstrate how agentless, runtime AI access control can protect sensitive data without slowing innovation, by enforcing policy where AI risk actually occurs.

If your access control strategy cannot see, inspect, and remediate AI data flows in real time, it is not truly securing AI at all.

No. AI access control solves a fundamentally different problem. IAM and RBAC decide who can access a system, but AI access control governs what data is allowed to flow through AI systems at runtime. A user can be fully authenticated and authorized, yet still leak PII, PHI, or IP through an AI prompt or upload. AI access control focuses on data context, not just identity.

AI access control protects data in motion, not just systems. In practice, that means controlling sensitive data across:

If your controls do not inspect these flows in real time, sensitive data can still escape even when users “have access.”

Traditional DLP was built for email, endpoints, and file transfers; AI access control must operate inside SaaS-native and AI-native workflows. The key differences are:

Without these capabilities, DLP becomes alerting noise rather than real protection.

Yes, but only if the controls are designed for AI-native workflows. Effective AI access control inspects prompts, uploads, and outputs regardless of whether the model is external or internal. This includes browser-based AI tools, API-driven LLM integrations, and AI features embedded inside SaaS platforms. Coverage gaps between “AI tools” and “business apps” are where most real-world leaks occur.

Modern AI access control platforms are designed to deploy in minutes, not months, especially when built with an agentless architecture. Fast deployment matters because AI adoption moves faster than security review cycles. The goal is to enforce policy invisibly, protecting sensitive data without interrupting developers, support teams, or everyday users.
