What is Shadow AI? The Hidden Security Risk (and How to Fix It)

Is Shadow AI putting your sensitive data at risk? Learn how to detect unmanaged AI tools, audit OAuth apps, and enforce real-time data governance.

Shadow AI is the modern evolution of "Shadow IT." It happens when employees, eager to work faster, sign up for Generative AI tools using their corporate email—or worse, personal accounts—without the knowledge or consent of the security team.

Unlike traditional software, Shadow AI poses a unique threat because of the data involved. It’s not just about an employee using an unapproved project management tool; it’s about an employee pasting proprietary code, customer PII, or financial projections into a public Large Language Model (LLM) that might retrain on that data.

The problem with Shadow AI isn't the technology itself; it's the lack of visibility. If you don't know it's there, you can't secure it.

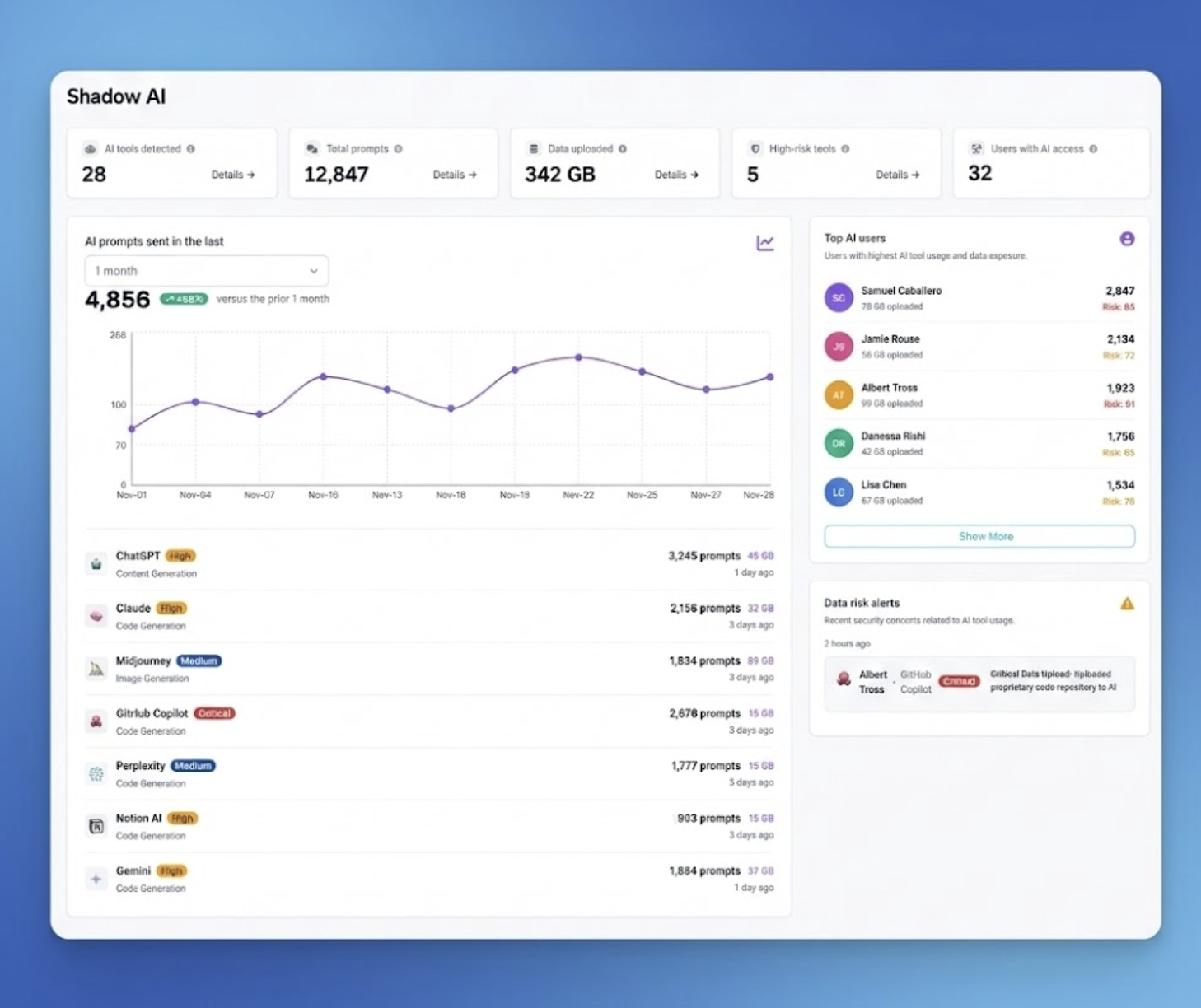

When we scan organizations for Shadow AI, we typically find 3x to 5x more AI applications than IT estimated. As the dashboard illustrates, a typical organization might have dozens of unmanaged tools, ranging from "High Risk" chatbots to "Medium Risk" image generators, that together consume hundreds of gigabytes of corporate data.

The main risks include:

1. Data Exfiltration & Model Training on Sensitive Data: The most immediate risk is that your confidential data becomes part of a public model's training set. When an employee uploads a "Customer List" or "Q4 Strategy Deck" to a free, consumer-grade AI tool, they often unwittingly grant that vendor the right to train their models on your data. Once that data is ingested, it can be nearly impossible to "unlearn," potentially exposing your trade secrets to competitors who prompt the same model.

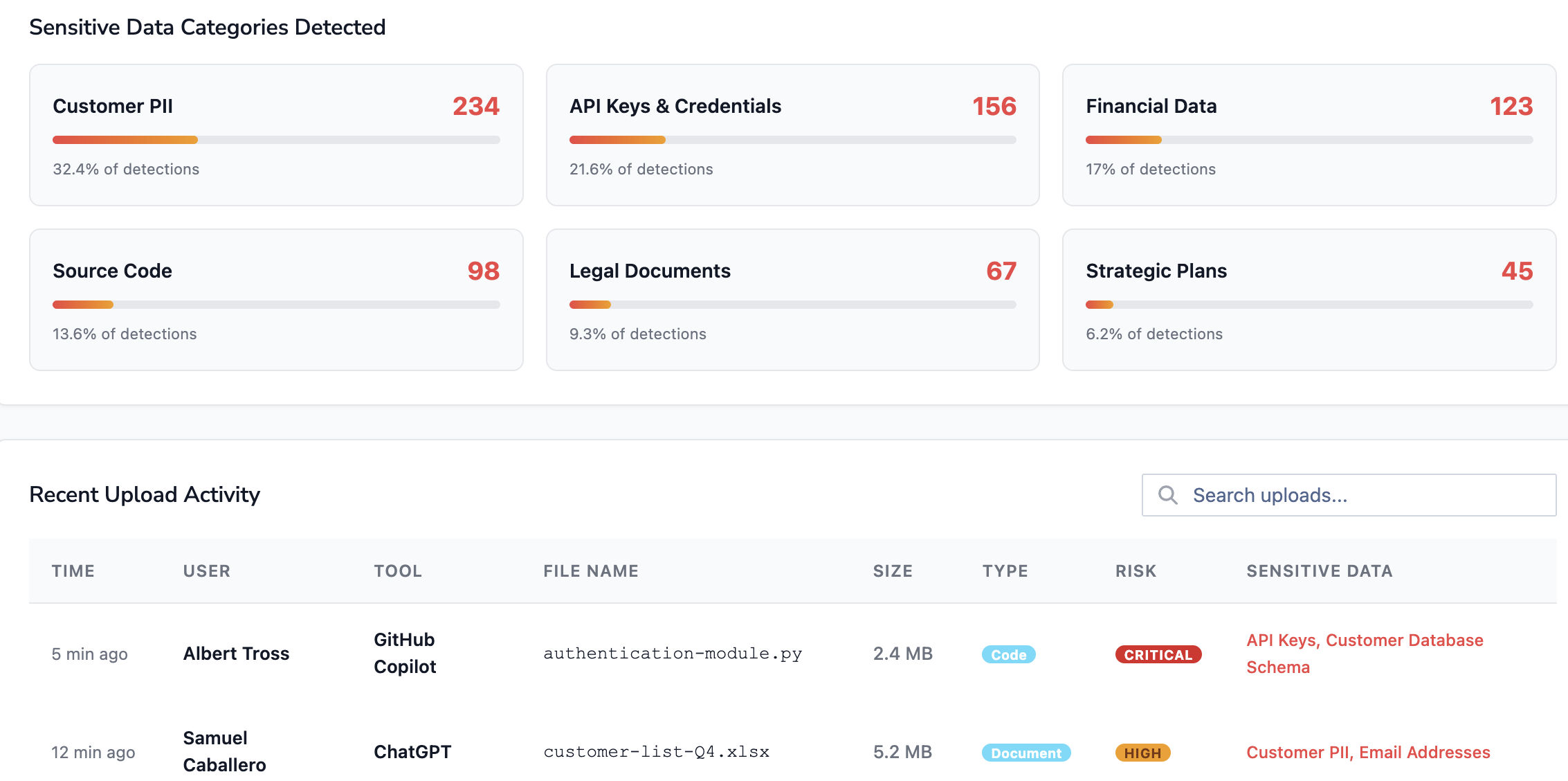

2. Exposure of API Keys & Source Code: Developers are the heaviest users of Shadow AI, often pasting entire code blocks into chatbots to debug errors or refactor legacy applications. As seen in our risk dashboard, this frequently leads to the accidental upload of Source Code containing hardcoded API Keys or Database Credentials. If the AI provider suffers a breach or leaks chat history (as seen in past incidents), your infrastructure secrets are compromised.
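To make this risk concrete, here is a minimal sketch of the kind of pre-submission check that could catch credentials before a snippet is pasted into a chatbot. It assumes Python 3.9+ and uses three illustrative regular expressions (an AWS access key ID prefix, a PEM private-key header, and generic `password=` / `api_key=` assignments). The rule names and the `find_secrets` / `redact` helpers are hypothetical, and production secret scanners rely on far larger, provider-specific rule sets.

```python
import re

# Illustrative detection rules (assumptions, not an exhaustive rule set).
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM private-key headers, e.g. "-----BEGIN RSA PRIVATE KEY-----".
    "private_key": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    # Quoted values of 8+ characters assigned to credential-like names.
    "credential_assignment": re.compile(
        r"""(?i)\b(?:api[_-]?key|secret|password|passwd|token)\b\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def find_secrets(text: str) -> list[tuple[str, str]]:
    """Return (rule_name, matched_text) pairs for every suspected secret in text."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        hits.extend((name, m.group(0)) for m in pattern.finditer(text))
    return hits


def redact(text: str) -> str:
    """Replace each suspected secret with a placeholder naming the rule that fired."""
    for name, pattern in SECRET_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text
```

For example, `redact('password="hunter2-prod-pw"')` returns `[REDACTED:credential_assignment]`, so the structure of the code survives while the credential does not.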

3. Compliance & Regulatory Violations: For regulated industries, Shadow AI is a compliance nightmare. Using unvetted tools to summarize patient notes (PHI), analyze loan applications, or process payment card data can violate privacy, data-residency, and security requirements under HIPAA, GDPR, GLBA, and PCI DSS. Shadow AI tools rarely come with the contractual safeguards these regimes expect (such as the Business Associate Agreements, or BAAs, that HIPAA requires) or documented encryption standards, leaving your organization open to significant fines.

4. Unvetted OAuth Permissions (The "Connected App" Risk): Shadow AI isn't just about what users type; it's about what they connect. Employees frequently authorize AI assistants to access their corporate Gmail, Google Drive, Slack, or Jira accounts via OAuth. These third-party apps often request excessive permissions (like "Full Read/Write" access) that persist long after the user stops using the tool, creating a permanent backdoor into your environment.

5. Lack of Audit Trails & Incident Response Blind Spots: You cannot respond to an incident you never saw. Because Shadow AI traffic is encrypted (HTTPS) and often runs through personal or unmanaged accounts, security teams have little or no visibility into what happened during a breach. Without a centralized audit trail of who used which tool and what data was uploaded, your incident response team is flying blind, unable to assess the blast radius of a data leak.
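The "who, which tool, what data" trail described above can be as simple as one structured record per upload. The sketch below assumes such a log already exists; the `AIUsageEvent` record and `blast_radius` helper are hypothetical names, and the category labels would come from whatever classifier you use. It shows how the log answers the first incident-response question: if a given AI vendor is breached, which users and which kinds of data were exposed?

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AIUsageEvent:
    """One row in a hypothetical centralized audit trail of AI tool usage."""
    user: str            # who
    tool: str            # which AI tool (here, the vendor's domain)
    data_category: str   # what kind of data, as labeled by your classifier
    timestamp: datetime  # when


def blast_radius(events: list[AIUsageEvent], tool: str, start: datetime, end: datetime) -> dict:
    """Summarize what was sent to `tool` between `start` and `end`, inclusive."""
    scoped = [e for e in events if e.tool == tool and start <= e.timestamp <= end]
    return {
        "users": sorted({e.user for e in scoped}),
        "uploads_by_category": dict(Counter(e.data_category for e in scoped)),
        "total_uploads": len(scoped),
    }
```

Keeping the record flat and immutable makes it easy to ship to any log store; the time window lets responders scope exposure to the period a vendor says it was compromised.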

Most legacy security tools (Firewalls, CASBs) struggle to detect Shadow AI because the traffic looks like standard web browsing (HTTPS).

To accurately find Shadow AI, you need to look at the identity layer. By integrating with the Google Workspace or Microsoft 365 admin APIs, you can audit OAuth consent events such as "Sign in with Google" or "Sign in with Microsoft." This reveals which third-party applications your users are authorizing, and with which permissions. This method provides near-instant visibility into the "who, what, and when" of AI usage without requiring heavy network appliances.
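As an illustration of the identity-layer approach on the Google Workspace side, the sketch below turns OAuth authorization events into a per-app inventory. It assumes the events were already fetched from the Admin SDK Reports API for the `token` application (the fetch call appears only as a comment and is not run), and that each event carries `client_id`, `app_name`, and `scope` parameters. Those field names reflect our reading of the token audit log format and should be verified against Google's documentation. Pagination, Microsoft 365, and error handling are left out.

```python
# Assumed input: the "items" list returned by the Admin SDK Reports API, e.g. (not run here)
#   service = googleapiclient.discovery.build("admin", "reports_v1", credentials=creds)
#   items = service.activities().list(userKey="all", applicationName="token",
#                                     eventName="authorize").execute().get("items", [])
# Field names below reflect our reading of the token audit log format; verify them.


def _params(event: dict) -> dict:
    """Flatten a Reports API event's parameter list into {name: value}."""
    flat = {}
    for param in event.get("parameters", []):
        # Scalar parameters carry "value"; list parameters (such as scopes) carry "multiValue".
        flat[param["name"]] = param["value"] if "value" in param else param.get("multiValue")
    return flat


def oauth_inventory(activities: list[dict]) -> dict[str, dict]:
    """Group OAuth 'authorize' events by client ID: who granted access, which scopes, and when."""
    apps: dict[str, dict] = {}
    for activity in activities:
        user = activity.get("actor", {}).get("email", "unknown")
        when = activity.get("id", {}).get("time", "")
        for event in activity.get("events", []):
            if event.get("name") != "authorize":
                continue  # skip revocations and other token events
            params = _params(event)
            scopes = params.get("scope") or []
            if isinstance(scopes, str):
                scopes = scopes.split()
            app = apps.setdefault(params.get("client_id", "unknown"), {
                "app_name": params.get("app_name", "unknown"),
                "users": set(),
                "scopes": set(),
                "first_seen": when,
            })
            app["users"].add(user)
            app["scopes"].update(scopes)
            # Timestamps in the same UTC RFC 3339 format sort correctly as strings.
            if when and (not app["first_seen"] or when < app["first_seen"]):
                app["first_seen"] = when
    return apps
```

Keying on the OAuth client ID rather than the display name groups every user of the same app together, even when vendors rename their products.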

Finding the apps is only step one. Step two is stopping the data leak. A robust Shadow AI strategy requires moving from "Discovery" to "Governance."

You need to know what is being sent to these tools. Are your developers pasting source code? Is HR pasting resumes? Granular governance lets you categorize these detections (e.g., "Source Code," "Customer PII") and block them in real time, before the data leaves the browser.
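A minimal sketch of that categorize-then-decide step, assuming Python 3.9+: a few crude regular expressions label text as "Customer PII" or "Source Code", and a hypothetical policy table decides, per app risk tier, which categories to block. The rules, tier names, and the `classify` / `decide` helpers are illustrative assumptions. Real enforcement inside the browser would run this kind of logic in an extension or proxy, which is out of scope here.

```python
import re

# Hypothetical detection rules keyed by governance category (crude placeholders).
CATEGORY_RULES = {
    "Customer PII": [
        re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # email addresses
        re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # US SSN format
    ],
    "Source Code": [
        re.compile(r"^\s*(?:def|class|import|from)\s+\w+", re.MULTILINE),
        re.compile(r"^\s*(?:public|private|function|const|let)\s+\w+", re.MULTILINE),
    ],
}

# Hypothetical policy: categories blocked for each app risk tier.
BLOCKED_BY_RISK = {
    "High": {"Customer PII", "Source Code"},
    "Medium": {"Customer PII"},
    "Low": set(),
}


def classify(text: str) -> set[str]:
    """Return every category whose rules match somewhere in text."""
    return {cat for cat, rules in CATEGORY_RULES.items() if any(r.search(text) for r in rules)}


def decide(text: str, app_risk: str) -> tuple[str, list[str]]:
    """Return ("block" or "allow", detected categories) for text bound for an app."""
    categories = classify(text)
    # Unknown or unrated apps get the strictest policy.
    blocked = categories & BLOCKED_BY_RISK.get(app_risk, BLOCKED_BY_RISK["High"])
    return ("block" if blocked else "allow", sorted(categories))
```

Treating unrated apps as "High" risk is a deliberate choice: with new tools appearing constantly, failing closed on sensitive categories is safer than failing open. The regexes will produce false positives (for example, a prose line that starts with "from"), so read them as stand-ins for a proper classifier.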

Shadow AI isn't just about chat interfaces; it's also about the "connected apps" ecosystem.

Employees often grant AI tools permission to read their email, calendar, or Google Drive via OAuth (Open Authorization). A "Note Taking AI" might request full read/write access to your CEO’s inbox. If that AI startup gets breached, the attackers have a direct line into your corporate data. Managing Shadow AI means auditing these OAuth scopes and revoking access for apps that demand excessive permissions.
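One way to audit those scopes, sketched under stated assumptions: given a per-app inventory of granted scopes (the input shape and the `flag_excessive` helper are hypothetical), flag every non-allowlisted app that holds a scope from a high-risk list. The scope URLs are real Google OAuth scopes, but which ones count as "excessive" is our own illustrative choice, and the revocation call appears only as a commented-out assumption.

```python
# Scopes treated as high risk in this sketch. The URLs are Google OAuth scopes;
# the risk classification is an illustrative assumption.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/": "Full Gmail access (read, send, delete)",
    "https://www.googleapis.com/auth/gmail.modify": "Read and modify Gmail",
    "https://www.googleapis.com/auth/drive": "Full Google Drive access",
    "https://www.googleapis.com/auth/calendar": "Full Calendar access",
}


def flag_excessive(apps: dict[str, dict], allowlist=frozenset()) -> list[tuple[str, str, list[str]]]:
    """Return (client_id, app_name, risky_scopes) for each non-allowlisted app.

    `apps` maps an OAuth client ID to {"app_name": str, "scopes": iterable of scope URLs}.
    """
    candidates = []
    for client_id, app in sorted(apps.items()):
        if client_id in allowlist:
            continue  # sanctioned, enterprise-approved apps are skipped
        risky = sorted(s for s in app["scopes"] if s in HIGH_RISK_SCOPES)
        if risky:
            candidates.append((client_id, app["app_name"], risky))
    return candidates


# Revocation (not run here), assuming the Admin SDK Directory API and admin credentials:
#   directory = googleapiclient.discovery.build("admin", "directory_v1", credentials=creds)
#   directory.tokens().delete(userKey=user_email, clientId=client_id).execute()
```

Returning candidates instead of revoking directly leaves room for a human review step, which matters when an app turns out to be business-critical.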

Can you simply block AI tools? You can try, but it's a game of whack-a-mole. New AI tools launch every day (there are more than 10,000 AI apps). Plus, blocking tools like ChatGPT often hinders productivity, leading employees to find more dangerous workarounds (like using personal devices).

Shadow AI isn't a reason to punish employees. It shows that they are innovative and want to work faster. The goal should be to "nudge" them toward approved, enterprise-ready versions of these tools where data stays private.

You can detect Shadow AI in near real time.
