Generative AI Data Security: Governance, DSPM & Runtime Controls

Learn how to secure and govern generative AI data using AI-native DSPM, real-time DLP, and enforceable AI data governance frameworks.

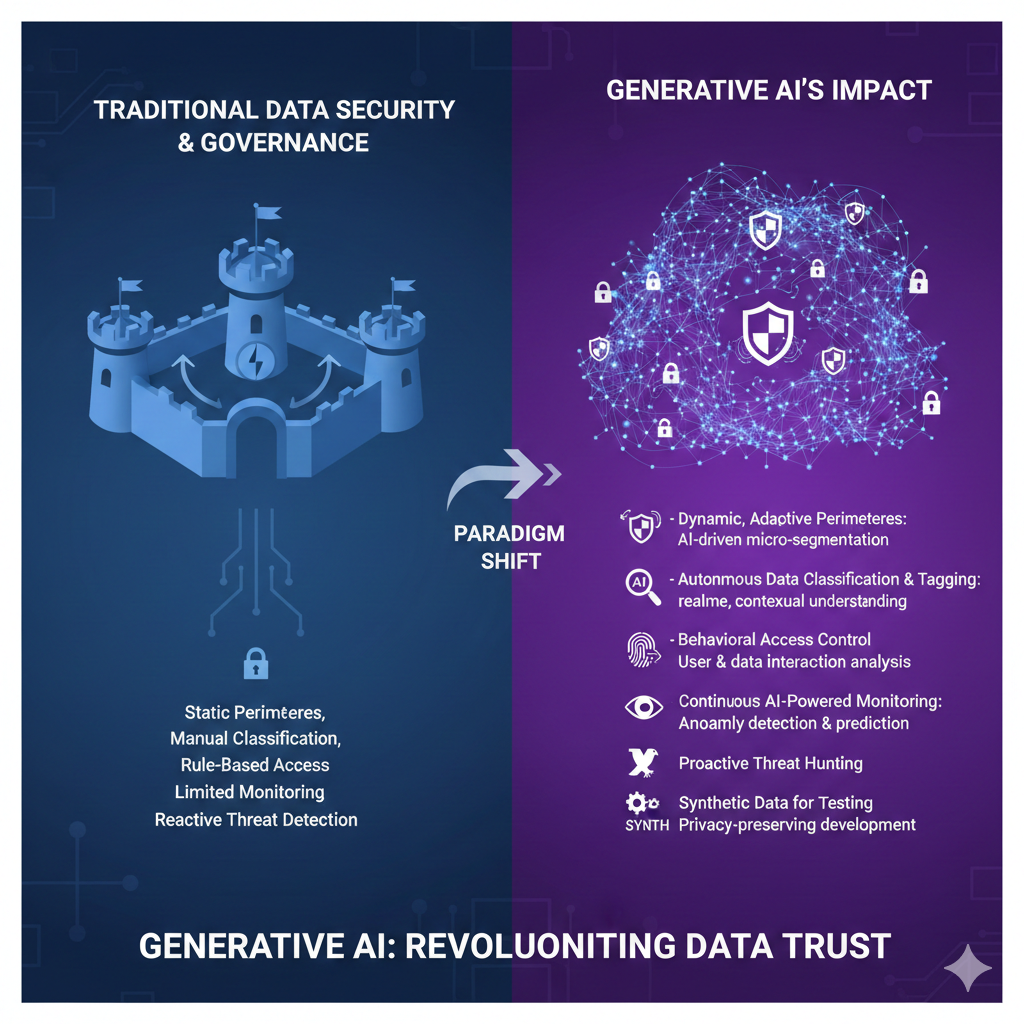

Generative AI Data Security has quickly become a board-level concern as organizations move from experimental AI usage to production deployment across everyday workflows. Security and compliance leaders are no longer asking whether generative AI introduces risk; they are asking how to govern AI data usage, prevent sensitive information from entering models, and enforce controls without slowing teams down. Prompts, uploads, embeddings, and AI-generated outputs now sit directly in the path of regulated data, intellectual property, and internal communications, creating exposure that traditional data security models were never designed to handle. This shift demands a new, architecture-first approach that treats governance, visibility, and runtime enforcement as a single, operational system rather than disconnected tools or policy documents.

Generative AI data security challenges traditional assumptions because AI systems do not simply store or transmit information; they continuously process, transform, and reassemble data in real time. Large language models ingest prompts, contextual memory, uploaded files, and external data sources, then generate new outputs that may contain fragments of sensitive information. This dynamic behavior breaks the static trust boundaries that legacy data security and governance models were built around, creating new forms of AI data exposure that are harder to predict and control.

At the core of this shift is how data enters and moves through AI workflows. In generative AI systems, sensitive information is often embedded directly into prompts or context windows rather than residing in clearly defined files or databases. Once data is embedded inside a prompt or passed into an LLM context, traditional AI data governance controls lose visibility. There is no “file to scan” or “record to classify” in the conventional sense, yet the data is still actively being processed and transformed, increasing overall LLM data risk.

Several foundational governance assumptions break down in this environment:

Despite these shifts, accountability does not disappear simply because AI generates the output. Organizations remain responsible for how personal data, regulated information, and intellectual property are handled, regardless of whether the content was typed by an employee or generated by a model. From a governance standpoint, this means ownership, auditability, and enforcement still apply, but they must now extend into AI-driven interactions.

This is why generative AI data security cannot be addressed with policy documents alone. Effective AI data governance requires visibility into how data flows into and out of AI systems, combined with enforceable controls that operate at runtime. Without this operational layer, organizations may have governance frameworks on paper while remaining blind to real-world AI data exposure.

Generative AI data security risks become real when abstract governance concerns are mapped to how AI is actually used day to day. Unlike traditional applications, generative AI introduces new data exposure paths that bypass familiar security boundaries and operate outside legacy inspection points. These paths are operational, frequent, and often invisible unless AI data governance and enforcement are designed specifically for LLM-driven workflows.

Prompts have quietly become one of the largest sources of AI data exposure across organizations. Employees regularly paste sensitive information directly into chat-based AI tools while seeking faster answers or automation support. This includes personally identifiable information, protected health information, source code, internal strategy documents, API keys, and credentials.

The challenge from a generative AI data security perspective is that prompts do not resemble traditional data objects. There is no file boundary, no attachment, and no clear perimeter to inspect. Legacy DLP and governance tools were built to monitor emails, files, and databases; they struggle to see or control data once it is embedded inside an LLM prompt. As a result, AI data governance policies may exist, but enforcement fails at the exact moment sensitive data is introduced into the AI system.
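Because a prompt is just free-form text, inspection has to work on strings rather than files. The sketch below is a minimal, illustrative detector, assuming simple regular expressions for a few data types (email addresses, US SSNs, the AWS access key ID format, and Luhn-validated card numbers). Production classifiers add context, validation, and ML-based detection; the function name `inspect_prompt` is hypothetical.

```python
import re

# Illustrative patterns only (assumptions for this sketch, not a complete detector set).
PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # 13 to 19 digits, optionally separated by single spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
}


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def inspect_prompt(prompt: str) -> list[tuple[str, str]]:
    """Return (data_type, matched_text) pairs found in free-form prompt text."""
    findings = []
    for data_type, pattern in PATTERNS.items():
        for match in pattern.finditer(prompt):
            value = match.group()
            if data_type == "card_number":
                digits = re.sub(r"\D", "", value)
                if not luhn_valid(digits):
                    continue  # long digit runs that fail Luhn are not treated as cards
            findings.append((data_type, value))
    return findings
```

The Luhn check is a deliberate design choice: it filters out order numbers and other long digit runs that merely look like card numbers, which keeps false positives from interrupting users.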

Beyond prompts, generative AI platforms increasingly support file uploads and extended context windows. Users attach spreadsheets, PDFs, support tickets, internal documentation, and customer records to provide richer context for AI-generated responses. While this improves productivity, it significantly increases LLM data risk.

Once uploaded, sensitive data is absorbed into the model’s working context rather than remaining in a clearly governed repository. Traditional governance tools often lose visibility at this point, especially when uploads are processed inline by AI services. From an AI data exposure standpoint, this creates a blind spot where regulated or proprietary data is actively used by AI systems without consistent discovery, classification, or runtime control.

Data exposure does not stop at ingestion. AI-generated outputs introduce downstream risk that many governance models overlook. Generated responses may contain sensitive information inferred from prompts or context, and those responses are frequently copied, reused, shared internally, or sent to external systems.

This creates a secondary exposure path where AI outputs propagate beyond their original context. Governance that focuses only on preventing sensitive data from entering AI systems is incomplete. Effective generative AI data security must also account for how AI outputs are handled, monitored, and controlled after generation. Without extending governance beyond ingestion, organizations remain exposed even if initial prompt controls are in place.

Together, these exposure paths illustrate why generative AI fundamentally reshapes AI data governance requirements. Visibility, enforcement, and accountability must span prompts, uploads, context, and outputs to meaningfully reduce AI-driven data exposure.

Generative AI data security makes the limitations of legacy controls visible very quickly. Traditional DLP and policy-only AI governance were built for stable data paths and human-paced workflows; generative AI introduces dynamic, machine-speed data flows that operate outside those assumptions. To understand why these approaches fail, it helps to break the gaps down operationally.

Taken together, these gaps explain why traditional approaches struggle in AI environments. Generative AI data security demands a shift from reactive detection and policy guidance to enforceable, runtime controls that operate directly within AI workflows.

Generative AI data security cannot be achieved through policy alignment alone. Effective governance in AI environments must operate as a living control system that combines visibility, decision-making, and enforcement across real-world AI usage. This requires moving beyond static governance models toward an operational framework that can adapt to how data actually flows through LLM-powered workflows.

Strong generative AI data governance starts with understanding which data is eligible to enter AI systems at all. Without this foundation, enforcement decisions are guesswork rather than control.

This level of visibility shifts governance from abstract policy definitions to practical awareness of real AI data exposure paths.

Visibility alone does not reduce risk. Governance only becomes effective when it is enforceable at the moment AI interactions occur. Runtime control is the point where generative AI data security either succeeds or fails.

This approach transforms AI data governance from advisory guidance into an active control layer that protects data during live AI usage.

Generative AI does not operate in isolation; it is deeply embedded into existing SaaS workflows. Effective governance must reflect this reality by unifying control across environments.

By centralizing governance, organizations avoid fragmented controls and policy drift. Generative AI data security becomes scalable, auditable, and enforceable across both AI and SaaS ecosystems, without slowing innovation or productivity.
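Centralized governance can be sketched as one rule set evaluated identically for events from every channel. The channel names, rules, and severity ordering below are illustrative assumptions; the point is that a prompt sent to an AI tool and a message posted in a SaaS app receive the same decision for the same content.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    channel: str  # hypothetical labels, e.g. "chatgpt_prompt", "slack_message", "drive_upload"
    text: str


# One rule set shared by every channel (illustrative rules, not a product configuration).
RULES = [
    ("us_ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "redact"),
    ("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "block"),
]
SEVERITY = {"allow": 0, "redact": 1, "block": 2}


def evaluate(event: Event) -> str:
    """Return the strictest action triggered by any rule; the channel does not change the outcome."""
    action = "allow"
    for _name, pattern, rule_action in RULES:
        if pattern.search(event.text) and SEVERITY[rule_action] > SEVERITY[action]:
            action = rule_action
    return action
```

Keeping the channel out of the decision logic is what prevents policy drift: adding a new AI tool or SaaS app means adding a new event source, not a new copy of the rules.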

Generative AI data security depends on more than controlling AI interactions in isolation. It requires a clear understanding of the organization’s underlying data posture, how sensitive data is distributed across systems, and how that data can realistically flow into AI workflows. DSPM, generative AI usage, and governance form a dependency chain; if one layer is missing, the entire security model weakens.

DSPM for AI provides the visibility foundation that effective AI data security requires. It identifies where sensitive data lives across SaaS applications, cloud storage, data warehouses, and collaboration platforms, long before that data is introduced into an AI prompt or uploaded to an LLM.

Without AI data posture management, organizations lack clarity on which datasets are sensitive, overexposed, or poorly governed. This creates blind spots where data can unintentionally flow into AI systems. DSPM closes that gap by mapping sensitive data locations, access patterns, and exposure levels, turning AI governance decisions into informed, risk-based actions rather than assumptions.
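To make "risk-based" concrete, here is a toy prioritization over a posture inventory. The asset fields, type weights, and scoring formula are assumptions for illustration, not a DSPM product's model; the idea is simply that data which is both sensitive and broadly reachable is the data most likely to end up in a prompt, so it ranks first.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    location: str              # hypothetical identifier for a folder, ticket queue, or bucket
    sensitive_types: set[str]  # labels produced by classification
    shared_externally: bool
    open_to_all_staff: bool


# Illustrative weights; a real posture model would be tuned per organization.
TYPE_WEIGHT = {"phi": 5, "payment_card": 5, "credentials": 5, "pii": 3, "source_code": 2}


def exposure_score(asset: DataAsset) -> int:
    """Sensitivity of the contents multiplied by how widely they can be reached."""
    sensitivity = sum(TYPE_WEIGHT.get(t, 1) for t in asset.sensitive_types)
    reach = 1 + (2 if asset.shared_externally else 0) + (1 if asset.open_to_all_staff else 0)
    return sensitivity * reach


def prioritize(assets: list[DataAsset]) -> list[tuple[str, int]]:
    """Rank assets so remediation and AI controls start with the riskiest data."""
    ranked = sorted(assets, key=exposure_score, reverse=True)
    return [(a.location, exposure_score(a)) for a in ranked]
```

For example, a wiki page containing credentials that is open to all staff and shared externally scores 5 × 4 = 20 and outranks a payroll file holding PII that only a few people can open (3 × 1 = 3).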

Generative AI does not create data sprawl; it accelerates and exposes it. Data that previously sat idle in documents, tickets, spreadsheets, or internal tools can instantly become active input through prompts, uploads, and context windows. This amplification effect raises AI data visibility requirements because AI systems pull from across the entire data estate, not from a single controlled repository.

In this environment, generative AI data security is only as strong as the organization’s weakest data posture. DSPM highlights where AI-driven workflows are most likely to introduce risk, allowing teams to prioritize controls based on real exposure rather than hypothetical scenarios.

AI data governance defines how data should be used, but DSPM determines whether that governance can realistically be enforced. Governance frameworks without posture awareness assume that data is already well understood and controlled; in practice, this is rarely the case.

When DSPM insights are connected to governance and runtime enforcement, governance becomes operational. Policies can be aligned with actual data locations, access paths, and exposure levels, enabling enforceable controls across AI and SaaS environments. In this way, DSPM does not sit alongside governance; it makes governance actionable within generative AI data security architectures.
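One way to picture posture feeding policy: a scan assigns each data source a sensitivity label, and the AI governance policy is written per label rather than per tool. The labels, source paths, and actions below are hypothetical; unknown sources default to review rather than being allowed.

```python
# Hypothetical output of a posture scan: data source -> highest sensitivity label found.
POSTURE_LABELS = {
    "crm/customers.csv": "restricted",
    "wiki/product-faq.md": "internal",
    "marketing/press-kit.pdf": "public",
}

# Governance policy expressed per label, so it applies to any AI tool the same way.
AI_USE_POLICY = {
    "public": "allow",
    "internal": "allow_with_redaction",
    "restricted": "block",
}


def decide_ai_use(source: str) -> str:
    """Return the AI-usage action for a data source based on its posture label."""
    label = POSTURE_LABELS.get(source)
    if label is None:
        # Unscanned data has unknown risk, so it is routed for review instead of allowed.
        return "review"
    return AI_USE_POLICY[label]
```

Writing policy against labels rather than against individual files is what lets posture findings flow straight into enforcement: when a rescan relabels a source, the AI decision changes without editing the policy.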

Generative AI data security becomes enforceable only when governance is embedded directly into how AI is used day to day. In production environments, this means applying controls at each stage of the AI interaction lifecycle, from prompt creation to output reuse, so that governance is operational rather than theoretical.

The first and most critical enforcement point is the prompt itself. Before a prompt is sent to ChatGPT or another generative AI system, it must be inspected in real time for sensitive data such as PII, PHI, credentials, intellectual property, or regulated information. When risk is detected, governance must trigger inline actions: redacting sensitive fields, masking values, or blocking the prompt entirely. This step prevents AI data exposure at the moment it would otherwise occur, rather than merely logging it after the fact.
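A minimal sketch of that inline decision, assuming a hypothetical per-type policy (block private keys, redact US SSNs, mask email addresses) and simple regex detectors. `enforce` and `Decision` are illustrative names, not a product API; what matters is that the action is chosen and applied before anything is forwarded to the model.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Illustrative detectors and policy; both are assumptions for this sketch.
DETECTORS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}
POLICY = {"private_key": "block", "us_ssn": "redact", "email": "mask"}


def mask_email(value: str) -> str:
    """Keep the first character of the local part and the domain."""
    local, domain = value.split("@", 1)
    return local[0] + "***@" + domain


MASKERS = {"email": mask_email}


@dataclass
class Decision:
    action: str            # "allow", "modify", or "block"
    prompt: Optional[str]  # text to forward to the model; None when blocked
    findings: list[str]


def enforce(prompt: str) -> Decision:
    """Apply the configured action for each detected type before the prompt is sent."""
    found = [name for name, rx in DETECTORS.items() if rx.search(prompt)]
    # Block takes precedence: nothing is forwarded if any blocking type is present.
    if any(POLICY[name] == "block" for name in found):
        return Decision("block", None, found)
    text = prompt
    for name in found:
        rx, action = DETECTORS[name], POLICY[name]
        if action == "redact":
            text = rx.sub(f"[REDACTED:{name.upper()}]", text)
        elif action == "mask":
            masker = MASKERS[name]
            text = rx.sub(lambda m: masker(m.group()), text)
    return Decision("modify" if found else "allow", text, found)
```

Ordering matters in this design: the block check runs first, so a prompt containing a private key is never partially redacted and then sent anyway.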

Generative AI platforms increasingly accept file uploads to enrich context. Spreadsheets, PDFs, support tickets, and internal documents often contain high-risk data. Effective generative AI data security requires scanning and classifying these files as they are uploaded, applying governance controls before the data is absorbed into the model’s context window. Without this enforcement point, sensitive data becomes invisible to legacy tools once processed by the AI system.
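As an illustration of classifying an upload before it reaches the model's context, the sketch below labels CSV columns whose values mostly match a detector. It assumes the file arrives as text, uses two illustrative patterns, and applies a hypothetical 50% match threshold; the function name is an assumption.

```python
import csv
import io
import re

# Illustrative detectors applied to whole cell values (assumptions for this sketch).
DETECTORS = {
    "us_ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
}


def classify_csv_upload(content: str, threshold: float = 0.5) -> dict[str, str]:
    """Label each column whose values mostly match a sensitive-data detector.

    Runs on the raw upload before it is forwarded to the AI service, while the
    data still has a clear file boundary.
    """
    reader = csv.DictReader(io.StringIO(content))
    rows = list(reader)
    labels: dict[str, str] = {}
    if not rows:
        return labels
    for column in reader.fieldnames or []:
        values = [(row.get(column) or "").strip() for row in rows]
        for data_type, rx in DETECTORS.items():
            hits = sum(1 for v in values if rx.fullmatch(v))
            if hits / len(values) >= threshold:
                labels[column] = data_type
                break  # one label per column is enough for a policy decision
    return labels
```

Column-level labels let a policy strip or block only the sensitive columns rather than rejecting the whole file, which keeps uploads useful while governance is still enforced.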

Governance does not stop when the model responds. AI-generated outputs may include sensitive information inferred from prompts or contextual inputs, and those outputs are frequently copied, reused, or shared across SaaS tools. Output monitoring ensures that governance policies extend beyond ingestion, reducing downstream risk and preventing uncontrolled propagation of sensitive AI-generated content.
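Output monitoring can be sketched the same way, applied to the response instead of the prompt. This minimal example assumes a hypothetical hand-off of `protected_values` (strings an upstream inspection step already flagged for the session) and checks one well-known credential format, the `AKIA` prefix of long-term AWS access key IDs. The names and masking format are illustrative.

```python
import re

# Long-term AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def monitor_output(output: str, protected_values: set[str]) -> tuple[str, list[str]]:
    """Mask sensitive content in a model response before it is reused downstream.

    protected_values is a hypothetical hand-off: strings an earlier inspection
    step flagged for this session (names, account numbers, and so on).
    """
    events = []
    safe = output
    # Replace longer values first so a short value cannot split a longer one.
    for value in sorted(protected_values, key=len, reverse=True):
        if value and value in safe:
            safe = safe.replace(value, "[MASKED]")
            events.append("protected_value_echoed")
    if AWS_KEY_ID.search(safe):
        safe = AWS_KEY_ID.sub("[MASKED:AWS_KEY_ID]", safe)
        events.append("aws_access_key_id")
    return safe, events
```

The returned events are what an audit trail would record, so output handling produces the same kind of evidence as prompt inspection.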

Enforceable governance requires evidence. Detailed audit logs must capture prompt inspections, enforcement actions, file handling decisions, and output monitoring events. These records provide accountability for security teams and defensibility during compliance reviews, turning AI governance into a measurable, auditable capability rather than an assumed one.
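A sketch of one audit record, assuming JSON lines as the log format and illustrative field names rather than a standard schema. It stores a SHA-256 digest of the inspected content instead of the content itself, so the log shows what was evaluated without becoming a second copy of the sensitive data.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user: str, channel: str, content: str, findings: list[str], action: str) -> str:
    """Build one JSON audit line for an AI enforcement decision."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "channel": channel,  # e.g. "chatgpt_prompt" or "file_upload" (illustrative labels)
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "findings": sorted(set(findings)),  # deduplicated data types, never raw values
        "action": action,
    }
    return json.dumps(record, sort_keys=True)
```

For short, low-entropy values such as a lone SSN, a plain hash can be reversed by guessing candidates; a keyed digest (for example Python's `hmac` module with a secret key) is the safer variant when that matters.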

Together, these enforcement layers demonstrate what real governance looks like in practice. By inspecting, controlling, and auditing AI interactions at runtime, organizations can secure ChatGPT and generative AI usage without slowing teams down, while maintaining clear accountability for how AI data is handled across the organization.

Generative AI data security does not exist outside established regulatory frameworks. While AI systems introduce new data flows and technical complexity, they do not change the fundamental compliance obligations organizations already carry. Regulations and standards such as GDPR, HIPAA, and PCI DSS continue to apply to AI inputs, contextual data, and generated outputs, regardless of whether the data is processed by a human or an AI system.

From a compliance perspective, AI does not eliminate data controller responsibility. Organizations remain accountable for how personal data, regulated information, and sensitive records are collected, processed, and shared. If an employee submits protected data to a generative AI tool, or if an AI-generated response exposes regulated information, responsibility still sits with the organization. This makes generative AI data security a governance problem as much as a technical one, requiring clear ownership and enforceable controls across AI usage.

Regulators increasingly expect evidence, not intent. Policies and training programs may demonstrate awareness, but they do not satisfy audit requirements on their own. Auditable enforcement is critical for demonstrating compliance in AI-driven workflows. Security teams must be able to show when prompts were inspected, how sensitive data was handled, what enforcement actions were taken, and how AI outputs were governed. Without this level of traceability, organizations struggle to defend their AI practices during regulatory reviews.

In practice, compliance in generative AI environments depends on operational controls that align governance with enforcement. By combining runtime inspection, remediation, and detailed audit logs, organizations can demonstrate that AI data usage is governed, monitored, and accountable. This approach transforms compliance from a documentation exercise into a defensible, measurable component of generative AI data security.

Generative AI fundamentally changes how data security platforms must be evaluated. Legacy DLP checklists were designed for static files, fixed perimeters, and delayed inspection; AI risk now occurs at runtime, across prompts, uploads, and generated outputs, inside SaaS-native workflows. In this environment, visibility without enforcement and policy without execution are insufficient. Strac was built for this reality by unifying AI-native discovery, enforceable governance, DSPM, and real-time DLP within a single control plane that operates where AI is actually used.

A modern generative AI data security platform must discover sensitive data based on how it is likely to be used in AI workflows, not only where it is stored. This includes SaaS applications, support tickets, shared documents, internal datasets, and files that users routinely paste into prompts or upload to models. Strac’s DSPM capabilities surface sensitive data across SaaS and cloud environments, allowing AI governance decisions to be grounded in real exposure rather than assumptions. This matters because governance cannot control what enters AI systems if sensitive data locations are unknown or poorly understood.

AI data governance must translate intent into action. Documentation and training define expectations, but effective platforms convert governance rules into technical controls that execute automatically during AI interactions. Strac operationalizes AI data governance by enforcing policies at runtime, ensuring sensitive data does not enter generative AI systems in violation of organizational or regulatory requirements. This matters because governance that cannot be enforced does not meaningfully reduce risk and does not stand up during audits.

AI risk manifests at the moment data is submitted to or generated by a model. Effective platforms must inspect prompts before submission, scan files uploaded to AI tools, and monitor AI-generated outputs that may contain sensitive information. Strac applies real-time inspection and remediation across these surfaces, enabling redaction, masking, blocking, or removal before exposure occurs. This matters because alert-only approaches notify teams after the leak has already happened.

Detection alone does not scale in AI environments. Platforms must actively reduce risk by remediating sensitive data inline, without relying on manual intervention. Strac performs real-time redaction and automated remediation across AI and SaaS workflows, protecting data while preserving productivity. This matters because automation is the only sustainable way to secure high-velocity, AI-driven workflows.

Generative AI does not operate in isolation; sensitive data flows from SaaS tools into AI systems and back again. Platforms must apply consistent policies across both environments. Strac secures data across ChatGPT, SaaS applications, and cloud environments under one control plane, preventing policy fragmentation. This matters because disjointed controls create blind spots and inconsistent enforcement.

DSPM provides posture awareness; AI DLP enforces controls on how that data moves into AI systems. These capabilities must work together. Strac unifies DSPM and AI DLP so organizations can discover sensitive data, assess exposure, and enforce controls without stitching together multiple tools. This matters because AI amplifies existing data sprawl; posture awareness is essential for effective governance.

Security teams must consider how quickly a platform can be deployed and scaled without operational friction. Strac’s agentless architecture enables rapid rollout across SaaS and AI workflows with minimal configuration and no endpoint agents. This matters because AI adoption moves faster than traditional security rollouts.

AI data security platforms must provide clear audit trails showing what data was inspected, what actions were taken, and how policies were enforced. Strac delivers detailed logging and reporting aligned with GDPR, HIPAA, PCI DSS, and SOC 2, including AI-specific enforcement evidence. This matters because regulators and auditors require proof, not intent.

AI security controls must integrate with existing SIEM and SOAR workflows to avoid creating isolated silos. Strac integrates with broader security operations tooling so AI data security events feed directly into monitoring and incident response. This matters because AI security must function as part of the wider security ecosystem.

Taken together, these capabilities explain why Strac meets modern evaluation criteria for generative AI data security. It was designed for runtime enforcement, unified governance, and AI-native data flows, not retrofitted from legacy DLP models.

Generative AI data security is no longer optional. As AI systems become embedded in everyday workflows, organizations must move beyond policy-only AI governance toward enforceable, runtime controls that actively prevent sensitive data from entering generative AI systems. Visibility, governance, and enforcement must operate together across prompts, uploads, and outputs to meaningfully reduce risk.

The organizations that succeed will be those that treat AI data security as an operational capability, not a documentation exercise. By implementing real-time inspection, automated remediation, and audit-ready governance across AI and SaaS environments, teams can secure generative AI usage while preserving the speed and productivity that drove adoption in the first place.

Generative AI data security focuses on preventing sensitive information from being exposed through generative AI systems during real usage. It addresses how data flows into AI tools through prompts, file uploads, and context windows, and how it leaves those systems through generated outputs. Because exposure happens in real time, generative AI data security must operate inline rather than relying on delayed detection or after-the-fact review.
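The difference between inline control and after-the-fact review is easiest to see as a wrapper around the model call. In this sketch, `send` is a placeholder for whatever client reaches the model (not a specific vendor SDK), and a single SSN pattern stands in for a full detector set. The prompt is cleaned before submission and the reply is checked before it is returned.

```python
import re
from typing import Callable

# A single illustrative detector; a real deployment would run the full set.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def guarded_completion(prompt: str, send: Callable[[str], str]) -> str:
    """Inspect a prompt inline, forward only the cleaned text, then check the reply."""
    safe_prompt = SSN.sub("[REDACTED:SSN]", prompt)  # control before submission
    reply = send(safe_prompt)                        # the model never receives the raw value
    return SSN.sub("[REDACTED:SSN]", reply)          # control after generation
```

Usage with a stand-in model: `guarded_completion("Check 123-45-6789", lambda p: "Echo: " + p)` returns `"Echo: Check [REDACTED:SSN]"`, and the stand-in never sees the original number.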

In practice, it combines visibility, governance, and enforcement into a single operational approach that can inspect and control AI interactions as they happen.

AI data governance defines how AI tools can be used, what data is allowed to enter them, and how usage is monitored and audited across the organization. It matters because AI does not remove regulatory or accountability obligations; organizations remain responsible for how personal data, regulated information, and intellectual property are processed.

Effective AI data governance includes:

Without enforcement, governance exists only on paper and does not reduce real AI data exposure.

Traditional DLP tools were designed for predictable data paths and static boundaries such as email, file transfers, and endpoints. ChatGPT changes the exposure surface by embedding data directly into text-based prompts and contextual memory.

Traditional DLP fails in this context because:

For ChatGPT, risk occurs at submission time, which is why AI-native, runtime enforcement is required.

DSPM supports AI data governance by providing visibility into where sensitive data actually resides across SaaS and cloud environments. This visibility is critical because AI systems pull context from across the organization, not from a single controlled repository.

DSPM enables governance by:

Without DSPM, AI governance decisions are based on assumptions rather than real data exposure.

Deployment timelines vary by organization, but modern AI data security platforms are designed to be deployed quickly without disrupting productivity. SaaS-native, agentless architectures significantly reduce rollout time compared to legacy security tools.

A typical rollout follows a staged approach:

This approach allows teams to achieve meaningful protection early while expanding coverage incrementally as AI usage grows.
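One common staging pattern, assumed here for illustration rather than taken from a specific product, moves from monitor-only to user warnings to inline enforcement, with the same detection running at every stage. The mode names and return values below are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    MONITOR = "monitor"  # record findings only
    WARN = "warn"        # notify the user, then let them proceed
    ENFORCE = "enforce"  # apply the policy action inline


def rollout_action(mode: Mode, has_findings: bool, policy_action: str) -> str:
    """Translate a policy decision into what actually happens at each rollout stage."""
    if not has_findings:
        return "allow"
    if mode is Mode.MONITOR:
        return "allow_and_log"
    if mode is Mode.WARN:
        return "warn_and_log"
    return policy_action  # for example "redact" or "block"
```

Because detection runs in every mode, the monitor stage produces the findings data needed to tune policies before enforcement is switched on.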
