What is DSPM for AI

Learn how DSPM applies to AI workflows, from training data and prompts to LLM outputs, and why enforcement is critical for AI security.

DSPM for AI is becoming critical because AI fundamentally changes how sensitive data moves inside a company. With AI embedded into everyday workflows, data no longer stays neatly inside apps, databases, or cloud storage. Instead, employees paste customer records into prompts, models pull context from internal documents, and AI generates new outputs in real time.

This breaks traditional security assumptions. Protecting databases and SaaS tools alone is no longer enough, because the riskiest moments now happen when data enters and flows through AI systems. Once data is used by a model, it can be transformed and reused in ways that file- or system-based security tools cannot see.

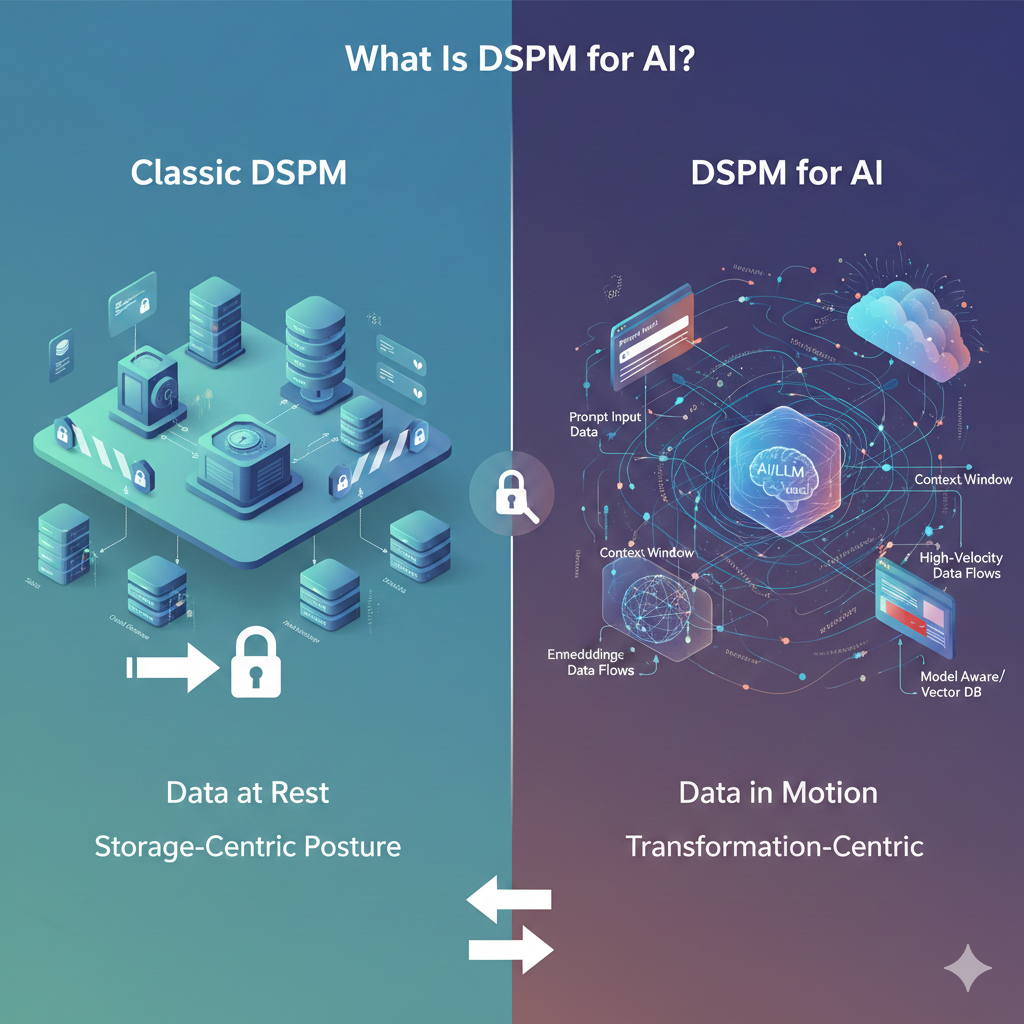

Traditional DSPM focuses on where data is stored and who can access it. In AI workflows, the highest risk shows up earlier: during prompts, context building, and data transformation. Securing AI at scale means extending DSPM beyond storage to cover these fast, temporary AI data flows, where visibility alone is not enough to reduce risk.

DSPM for AI applies data security posture management to AI and LLM systems, not just to SaaS apps or cloud storage. It focuses on how sensitive data flows through AI, not only where it is stored.

In practice, DSPM for AI provides visibility into:

The key difference from traditional DSPM is how data behaves. Classic DSPM assumes data is stored in known systems and controlled by permissions. DSPM for AI must handle fast, temporary data flows where sensitive data may never be saved but can still be exposed. This shifts DSPM from a storage-centric model to a flow-aware approach that reflects how AI systems work in production.

DSPM for AI starts with one job: find the data that can realistically end up in prompts, uploads, or retrieval pipelines. AI exposure usually originates upstream, in SaaS apps, cloud stores, and human copy-paste behavior. If DSPM cannot map those sources, your “AI posture” is guesswork.

Where DSPM discovers AI-exposed data most often:

Bottom line: DSPM discovery is about where AI can pull from, not just where data “lives.”
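The idea of discovering by reachability rather than by location can be sketched in a few lines. This is a minimal illustration only: the `DataStore` type, the store names, and the `ai_exposed` helper are hypothetical, and a real inventory would come from SaaS and cloud connectors rather than a hard-coded list.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DataStore:
    name: str
    sensitive: bool
    # Hypothetical field: AI tools or pipelines that can read this store
    # (connectors, retrieval indexes, users who can copy-paste from it).
    readable_by: set[str] = field(default_factory=set)


def ai_exposed(stores: list[DataStore], ai_tools: set[str]) -> list[str]:
    """Return names of sensitive stores that at least one AI tool can read."""
    return sorted(
        s.name for s in stores
        if s.sensitive and s.readable_by & ai_tools
    )


inventory = [
    DataStore("crm_exports", sensitive=True, readable_by={"support_copilot"}),
    DataStore("hr_archive", sensitive=True, readable_by=set()),
    DataStore("public_docs", sensitive=False, readable_by={"support_copilot"}),
]

print(ai_exposed(inventory, {"support_copilot", "rag_pipeline"}))
```

Here `hr_archive` is sensitive but no AI tool can pull from it, so it stays out of the result, while `crm_exports` is flagged because a copilot can read it.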

DSPM for AI only works if it covers the data types AI workflows actually touch. If you only scan tables and files, you miss the real AI leakage surfaces: runtime inputs, derived artifacts, and secondary storage.

AI data types DSPM must cover:

Spicy take: most “DSPM for AI” claims fall apart because they never model prompts and embeddings as first-class risk surfaces.

DSPM answers “where is sensitive data?” That is necessary, but it is not protective. AI leakage happens at runtime: prompt submission, file upload, context retrieval, output sharing. By the time an alert fires, the data has already crossed the boundary.

This is the boundary:

If you want risk reduction, you need controls that act inline, not reports after the fact. DSPM without enforcement is visibility, not security.

AI security has to follow the data flow, not the org chart. The model is simple: discover what can feed AI, classify it, then enforce before ingestion. This is how you move from “we know where risk is” to “we stop leakage.”

Buyer-grade flow:

If you can’t enforce before ingestion, you don’t control AI data; you only observe it.
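The classify-then-enforce-before-ingestion step can be sketched as a gate on retrieved context. This is an illustration under stated assumptions: the `classify` and `build_context` helpers, the two labels, and the single SSN-like pattern are hypothetical stand-ins for a real classifier and policy.

```python
import re

# Illustrative pattern only; a real classifier covers far more data types.
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def classify(text: str) -> str:
    """Label a chunk 'restricted' if it contains an SSN-like pattern."""
    return "restricted" if SSN.search(text) else "general"


def build_context(chunks: list[str], allowed: set[str]) -> list[str]:
    """Admit only chunks whose label is allowed, before the model sees them."""
    return [c for c in chunks if classify(c) in allowed]


retrieved = [
    "Refund policy: 30 days from delivery.",
    "Customer SSN on file: 123-45-6789.",
]
context = build_context(retrieved, allowed={"general"})
print(context)
```

The point of the design is ordering: the filter runs between retrieval and prompt assembly, so a restricted chunk never reaches the model, rather than being reported afterward.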

DSPM for AI is foundational, but it is not sufficient on its own to secure AI systems in production. DSPM establishes visibility into where sensitive data exists and how it is exposed across SaaS, cloud, and data repositories. That visibility is essential, but AI introduces a dynamic runtime layer where risk is realized instantly, long after discovery is complete. This is where AI security posture management becomes necessary.

DSPM answers posture questions at rest and over time: where is sensitive data, who can access it, and how exposed is it? AI security posture management extends that foundation into live AI workflows, where data is actively submitted, transformed, and generated. Rather than replacing DSPM, it builds on it to deliver enforceable control over how AI systems actually use data.

AI security posture management extends DSPM for AI by adding four critical capabilities:

AI interactions must be governed at the moment they occur. This includes inspecting prompts, file uploads, and contextual inputs as they are sent to models, not after the fact. Runtime controls ensure posture awareness is applied where AI risk materializes.

Visibility alone cannot stop AI data leakage. AI security posture management introduces inline enforcement: redacting, masking, blocking, or modifying data before it reaches a model. This transforms DSPM insights into preventative action.
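To make the three inline actions concrete, here is a minimal sketch of a prompt gate. Everything in it is an assumption for illustration: the `Action` enum, the `enforce` function, and the two simplified regex patterns are hypothetical and do not represent any vendor's API or a production-grade detector.

```python
import re
from enum import Enum


class Action(Enum):
    REDACT = "redact"  # replace the match with a type placeholder
    MASK = "mask"      # hide all but the last four characters
    BLOCK = "block"    # refuse to forward the prompt at all


# Simplified, illustrative detectors; real detection needs validation logic.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def _mask(match: re.Match) -> str:
    text = match.group()
    return "*" * (len(text) - 4) + text[-4:]


def enforce(prompt: str, policy: dict[str, Action]) -> tuple[bool, str]:
    """Apply the policy before the prompt is sent; return (allowed, text)."""
    # Blocking takes priority: nothing is forwarded if a blocked type appears.
    for kind, action in policy.items():
        if action is Action.BLOCK and PATTERNS[kind].search(prompt):
            return False, ""
    for kind, action in policy.items():
        if action is Action.REDACT:
            prompt = PATTERNS[kind].sub(f"[{kind.upper()}]", prompt)
        elif action is Action.MASK:
            prompt = PATTERNS[kind].sub(_mask, prompt)
    return True, prompt


allowed, safe_prompt = enforce(
    "Mail jane@example.com about card 4111 1111 1111 1111",
    {"email": Action.REDACT, "card": Action.MASK},
)
print(allowed, safe_prompt)
```

Only `safe_prompt` would be forwarded to the model. Switching the `card` entry to `Action.BLOCK` makes the same call return `(False, "")`, so the request is stopped instead of sanitized.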

When sensitive data is detected in AI workflows, automated remediation is required to reduce risk immediately. This includes removing sensitive content from prompts, preventing unsafe outputs, and correcting policy violations without slowing teams down.

AI environments change rapidly. New tools, new models, and new usage patterns emerge constantly. AI security posture management continuously reassesses risk across AI workflows, ensuring governance adapts as AI usage evolves.

In practical terms, DSPM establishes what could go wrong, while AI security posture management governs what is allowed to happen. Platforms like Strac operationalize this transition by combining DSPM visibility with real-time enforcement and remediation across AI, SaaS, and cloud environments. This unified approach enables organizations to move from static AI governance policies to continuously enforced AI security posture management that scales with real-world AI adoption.

As AI systems move into regulated workflows, visibility alone is no longer enough for compliance. Regulators are not asking where data exists; they are asking how AI use is controlled, enforced, and auditable at the moment of risk.

DSPM for AI provides the visibility layer. Compliance readiness requires enforcement and traceability on top of it.

What regulators now expect to see in AI workflows:

AI compliance pressure shows up differently by framework, but the control expectations are consistent.

Across frameworks, auditors are converging on the same requirement: a provable chain of control.

DSPM for AI establishes where compliance risk exists. Enforcement and auditability prove that AI usage is actually governed.
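One way to make enforcement records harder to dispute is to chain them by hash, so that editing an earlier record invalidates it and everything after it. The sketch below is an assumption-laden illustration, not a requirement stated by any framework: the `append_event` and `verify` helpers and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record


def _digest(event: dict, prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_event(log: list[dict], event: dict) -> dict:
    """Append an enforcement event linked to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {
        "event": event,
        "prev_hash": prev_hash,
        "hash": _digest(event, prev_hash),
    }
    log.append(record)
    return record


def verify(log: list[dict]) -> bool:
    """Recompute every hash and check that each record links to the last."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(record["event"], record["prev_hash"]):
            return False
        prev_hash = record["hash"]
    return True


audit_log: list[dict] = []
append_event(audit_log, {"user": "u1", "tool": "chat", "action": "redact"})
append_event(audit_log, {"user": "u2", "tool": "chat", "action": "block"})
print(verify(audit_log))
```

If someone later changes the first record's `action` to `"allow"`, `verify` returns `False`, which is the property an auditor would look for in a chain of control.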

Spicy take: AI compliance fails when teams mistake posture reports for protection. Regulators care about what happened at runtime, not what your dashboard said last week.

Extending DSPM for AI into real protection requires moving from visibility to control without fragmenting the security stack. Strac is built to close that gap by combining DSPM and AI DLP into a single, enforceable AI security posture management layer. Rather than adding another point tool, Strac operationalizes posture insights directly inside AI workflows where risk actually occurs.

Strac unifies data discovery, classification, and posture assessment with real-time AI DLP enforcement. This ensures DSPM insights do not stop at dashboards, but directly inform what AI interactions are allowed, modified, or blocked.

Strac discovers sensitive data across SaaS apps, cloud storage, and repositories commonly used to feed AI systems. This discovery is AI-contextual: it focuses on data that is likely to appear in prompts, uploads, or retrieval pipelines, not just data at rest.

Prompts, contextual inputs, and uploaded files are inspected in real time as they are submitted to AI tools. This allows security controls to operate at the moment AI risk materializes, rather than after exposure has already occurred.

When sensitive data is detected, Strac enforces policy inline by redacting, masking, or blocking content before model ingestion. This transforms AI security from alert-driven response to proactive prevention of AI data leakage.

Strac’s agentless architecture enables rapid rollout without endpoint agents or workflow disruption. Security teams can extend AI security posture management across SaaS and AI tools quickly, even in dynamic environments.

Policies, posture visibility, enforcement actions, and audit logs are managed from a single control plane. This creates consistent AI governance across traditional SaaS workflows and modern AI interactions, reducing operational complexity.

Together, these capabilities turn DSPM from a foundational visibility layer into an enforceable AI security posture management system, one that reflects how AI is actually used in production and prevents data exposure before it happens.

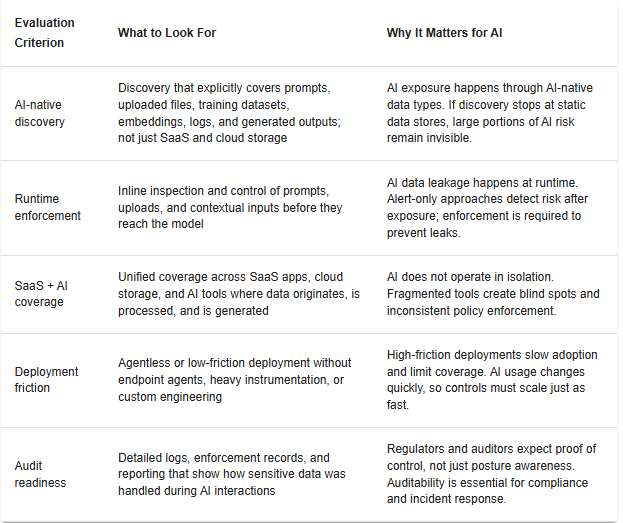

As organizations move from experimentation to production AI, evaluating DSPM for AI requires a different lens than traditional DSPM buying decisions. Buyers at this stage already understand the risks; the key question is whether a solution can realistically secure AI data flows without breaking productivity or creating operational drag. The criteria below are designed to help security leaders assess whether a platform can move beyond visibility and support enforceable AI governance at scale.

A DSPM for AI solution must explicitly understand AI data paths, not just traditional SaaS and cloud storage. This includes discovering sensitive data likely to appear in prompts, uploaded files, training datasets, embeddings, and generated outputs. If discovery is limited to static data stores, AI exposure will remain partially invisible.

AI risk occurs at runtime, not during scheduled scans. Buyers should validate whether the platform can inspect and control prompts, uploads, and contextual inputs before they reach a model. Alert-only approaches signal risk but do not prevent AI data leakage, making runtime enforcement a non-negotiable capability.

AI does not operate in isolation. Effective DSPM for AI must span the full ecosystem: SaaS applications where data originates, cloud storage used for training or retrieval, and AI tools where data is consumed and generated. Fragmented coverage increases blind spots and policy inconsistency.

High-friction deployments slow adoption and limit coverage. Buyers should assess whether the solution requires endpoint agents, custom instrumentation, or extensive engineering effort. Agentless or low-friction architectures are better suited for fast-moving AI environments where usage patterns change rapidly.

AI governance increasingly intersects with regulatory and internal audit requirements. A DSPM for AI solution should provide detailed logs, enforcement records, and reporting that demonstrate how sensitive data was handled in AI workflows. This is critical for compliance reviews, incident response, and ongoing posture assessment.

When evaluated through these criteria, DSPM for AI becomes less about static posture reporting and more about operational control. Solutions that combine AI-native discovery with runtime enforcement and unified coverage are best positioned to support secure AI adoption without slowing innovation.

DSPM for AI is a necessary starting point, but it is not enough on its own. Visibility into where sensitive data exists and how it is exposed is foundational; however, AI systems introduce runtime risk that posture management alone cannot control. In AI environments, the most damaging data leaks occur in the moment data is submitted, transformed, or generated, long after discovery is complete.

Effective AI security requires enforcement. Without inline inspection, redaction, and blocking, organizations are left reacting to alerts instead of preventing AI data leakage. DSPM answers where sensitive data lives, but AI security demands controls that determine whether that data can be used, shared, or transformed right now.

The future of AI data protection is a unified model. DSPM + AI DLP, delivered through a single AI security posture management layer, connects discovery with real-time enforcement and auditability. This convergence allows organizations to scale AI safely, maintaining visibility, control, and compliance as AI becomes embedded across every business workflow.

DSPM for AI is the application of data security posture management to AI and LLM-driven systems. It focuses on discovering and understanding sensitive data exposure across AI-specific surfaces such as training data, prompts, context windows, embeddings, logs, and generated outputs. The purpose is to give security teams clear visibility into how sensitive data could be introduced into or exposed by AI systems, forming the foundation for governance and control.

DSPM for AI expands posture management from static storage environments into dynamic, runtime AI workflows. Key differences include:

These differences make DSPM for AI inherently more dynamic and closely tied to enforcement than traditional DSPM.

DSPM alone cannot prevent AI data leaks. It identifies where sensitive data exists and which users or systems can access it, but AI leaks occur at runtime when data is submitted to or generated by a model. Preventing leaks in ChatGPT and copilots requires inline inspection and enforcement, such as redaction, masking, or blocking, before data reaches the model. DSPM provides the necessary context, but enforcement is what actually stops AI data leakage.

Yes, especially when combined with enforcement and auditability. DSPM for AI supports compliance by:

For GDPR or HIPAA readiness, regulators also expect evidence that controls are enforced during AI usage, not just visibility reports, which is why DSPM is most effective when paired with runtime controls.

Deployment timelines vary based on environment complexity, but most teams follow a phased approach. Initial rollout typically starts with connecting core SaaS and cloud data sources for discovery, followed by expanding coverage to AI tools and enforcement for high-risk workflows. Solutions that rely on heavy agents or custom engineering take longer to deploy and scale, while low-friction, agentless approaches generally reduce time-to-value and accelerate coverage across AI environments.
