Top 5 AI Data Security Companies

A practical buyer’s guide to AI data security companies: how to evaluate governance, DSPM, and AI DLP capabilities.

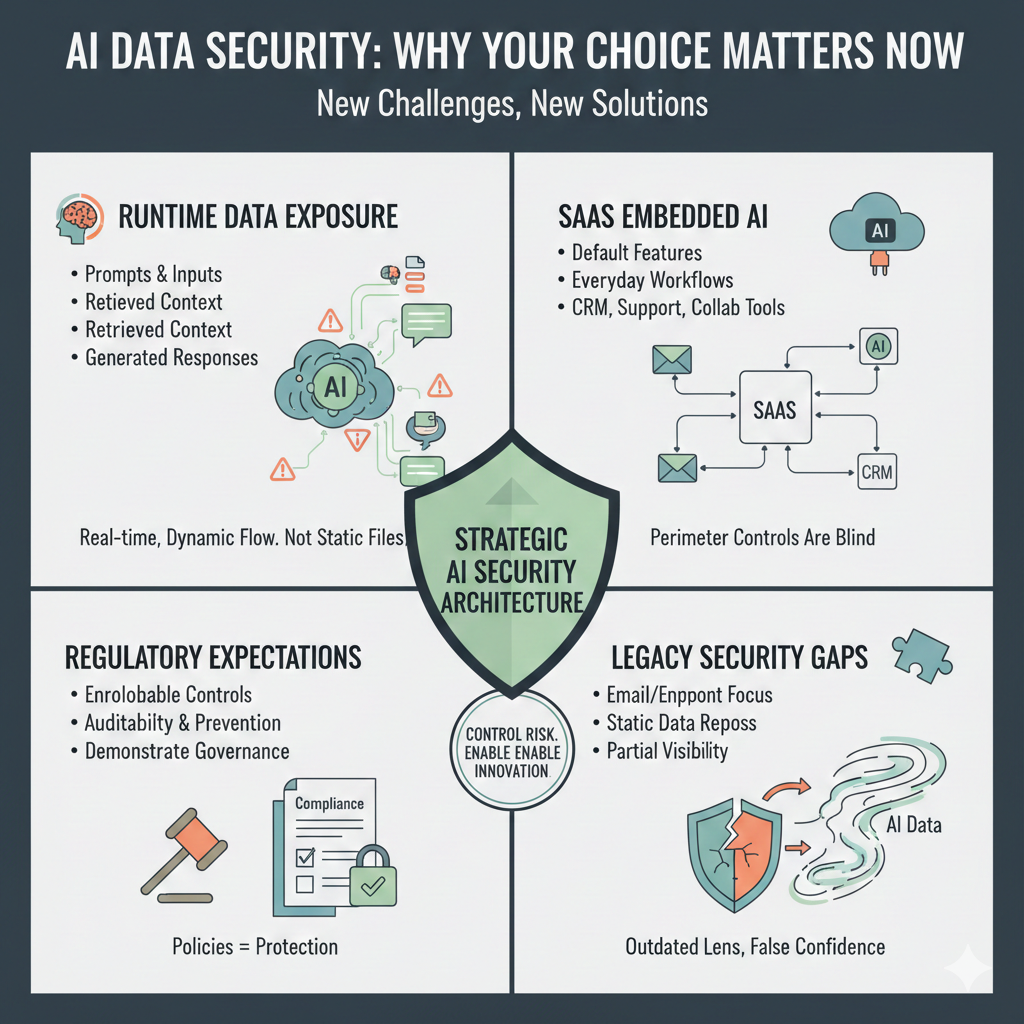

Generative AI has fundamentally changed how sensitive data moves inside organizations. Data now flows through prompts, copilots, SaaS-embedded AI features, and AI-generated outputs, often outside traditional security controls and inspection points. As a result, choosing the wrong type of AI data security company can leave critical gaps that are invisible until an incident occurs. Vendor selection has therefore become a strategic security decision, not a simple tooling exercise.

AI adoption has moved faster than most security architectures were designed to handle. Sensitive data is no longer exposed only through files, databases, or outbound emails; it now flows dynamically through prompts, copilots, embedded SaaS AI features, and generated outputs. In this environment, the choice of an AI data security company directly determines whether risk is actually controlled or merely documented. Buyers who approach this decision using legacy security categories often discover gaps only after AI usage is already widespread.

Ultimately, choosing the right AI data security company is about aligning security controls with how data actually moves today. Organizations that treat this as a strategic architecture decision are far better positioned to manage AI risk without slowing innovation.

The term “AI data security companies” is widely used but poorly defined in practice. Vendors marketed under this label often address very different layers of risk, which creates confusion during evaluation and leads buyers to compare tools that were never designed to solve the same problem. To make informed decisions, security leaders need a clear taxonomy that distinguishes how different types of vendors approach AI-related data exposure. The sections below break down the three most common categories buyers encounter.

AI model and application security companies focus primarily on protecting the AI system itself rather than the enterprise data flowing through it. These platforms are often rooted in application security or developer tooling and are designed to help teams harden AI-powered applications against misuse and external threats. While valuable in certain scenarios, they typically sit upstream or downstream from enterprise data controls.

As a result, this category addresses AI reliability and application safety more than enterprise-wide data protection.

AI data governance and DSPM companies focus on understanding where sensitive data lives and how it could be exposed as organizations adopt AI. These platforms form the foundation for AI data security by mapping risk, ownership, and access across SaaS and cloud environments. Their strength lies in visibility and context rather than real-time control.

While essential, governance and DSPM alone do not stop data from being shared with AI systems at the moment of use.

AI DLP companies focus on enforcement at the point where AI risk actually materializes. These platforms are designed to inspect and control data in motion as it enters and exits AI systems, rather than relying solely on after-the-fact alerts or audits. Their value becomes most apparent once AI usage is already embedded in daily workflows.

This category directly addresses the operational reality of generative AI usage inside enterprises.

Modern enterprises increasingly require governance, discovery, and enforcement to work together as a single strategy rather than as isolated point tools. As AI becomes embedded across SaaS platforms and everyday workflows, effective AI data security depends on aligning visibility with real-time control. Buyers who understand this taxonomy are far better equipped to choose an AI data security company that matches their actual risk surface.

Evaluating AI data security companies requires moving beyond traditional feature checklists that were designed for pre-AI data flows. In modern environments, sensitive data moves dynamically through prompts, copilots, SaaS-embedded AI features, and generated outputs, often at speeds and volumes that legacy controls were never built to handle. The central question buyers must answer is whether a solution can actually reduce AI-driven data risk in real production environments without disrupting productivity or slowing adoption. The criteria below reflect what matters most when AI usage is already embedded across the organization.

Taken together, these capabilities help distinguish AI data security companies that merely document risk from those that actively control it. Buyers who evaluate vendors through this lens are far more likely to select a solution that scales with AI adoption rather than becoming obsolete as usage grows.

There is no universal “best” AI data security company. The right choice depends on how AI is actually used inside your organization and where sensitive data intersects with those workflows. Security leaders who start with internal usage patterns rather than vendor features are far more likely to select a solution that delivers real risk reduction. The scenarios below outline common AI adoption patterns and the capabilities that matter most in each case.

When employees rely on conversational AI and copilots as part of everyday work, runtime controls become essential. The most important capabilities are inline inspection of prompts and uploads, real-time blocking or redaction, and clear user feedback that does not interrupt productivity. Visibility alone is insufficient in these environments, because sensitive data exposure happens at the moment of interaction rather than during storage or transfer.

Many organizations now use SaaS tools where AI features are enabled by default in CRM systems, support platforms, collaboration tools, and productivity suites. In these scenarios, coverage breadth and SaaS-native discovery matter most. Effective AI data security companies must understand how embedded AI features access and generate data across multiple applications, rather than focusing on a single AI interface.

Organizations operating under GDPR, HIPAA, PCI DSS, or similar frameworks need enforceable governance and audit-ready reporting. The priority here is traceability: being able to demonstrate which policies exist, how they are enforced, and where sensitive data is prevented from entering AI systems. Solutions that rely primarily on documentation or manual processes struggle to meet audit expectations in AI-driven environments.

Fast-moving teams often adopt AI aggressively to accelerate output, which increases exposure if controls add friction. In these environments, low-friction deployment models and minimal user disruption are critical. Capabilities such as agentless architectures, fast onboarding, and inline controls that operate transparently help maintain security without slowing teams down.

Matching the right AI data security company to your organization requires aligning capabilities with real usage patterns rather than abstract requirements. When security controls reflect how AI is actually used, organizations can reduce risk while still enabling innovation at scale.

Many organizations struggle with AI data security not because they lack tools, but because of flawed assumptions made during the buying process. As AI adoption accelerates, security teams often apply legacy evaluation frameworks to fundamentally new data flows. The result is a mismatch between perceived coverage and actual risk. The following mistakes are among the most common and most costly.

Avoiding these pitfalls requires reframing AI data security as an operational challenge rather than a theoretical one. Organizations that ground their vendor evaluations in real usage patterns are far more likely to achieve lasting risk reduction.

Some platforms are designed specifically for the intersection of AI data governance, DSPM, and AI DLP. Rather than treating AI as a standalone risk or focusing on a single control layer, these solutions address how sensitive data actually moves through AI-enabled SaaS workflows. This category has emerged in response to the limitations of tools that offer visibility without enforcement or policies without technical control.

These platforms start with understanding sensitive data in the context of AI usage. Discovery and classification are SaaS-native and API-driven, enabling visibility into where regulated or proprietary data lives and where it is likely to be used by AI features. This foundation allows security teams to reason about AI risk based on real data flows rather than assumptions.

Governance is enforced through technical controls that reflect how employees and systems actually interact with AI. Policies are applied to prompts, uploads, and AI-enabled workflows instead of existing as static documentation. This approach aligns governance intent with operational reality.

Enforcement occurs at the moment AI risk materializes. Inline inspection of data in motion allows platforms in this category to block, redact, or warn before sensitive information reaches an AI system or is generated in outputs. This distinguishes them from alert-only approaches that respond after exposure has already occurred.
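As a rough illustration of what inline block, redact, or warn decisions can look like, the sketch below inspects a prompt before it is forwarded to an AI system. It is a minimal example under stated assumptions, not any vendor's implementation: the regex patterns, the `POLICY` mapping, and the `inspect_prompt` function are all hypothetical, and production detectors typically rely on validation and context rather than bare regular expressions.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Illustrative detectors only (assumption); real products use richer classification.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}

# Hypothetical policy: the action applied to each finding type.
POLICY = {"ssn": "redact", "email": "warn", "aws_access_key": "block"}
SEVERITY = {"allow": 0, "warn": 1, "redact": 2, "block": 3}


@dataclass
class Decision:
    action: str            # strictest action triggered by any finding
    findings: List[str]    # names of the detectors that matched
    text: Optional[str]    # text that may be forwarded; None when blocked


def inspect_prompt(prompt: str) -> Decision:
    """Decide what happens to a prompt before it reaches the AI system."""
    findings = [name for name, pattern in PATTERNS.items() if pattern.search(prompt)]
    action = max((POLICY[f] for f in findings), key=SEVERITY.get, default="allow")
    if action == "block":
        return Decision(action, findings, None)
    text = prompt
    for name in findings:
        if POLICY[name] == "redact":
            # Replace each match with a labeled placeholder.
            text = PATTERNS[name].sub(f"[REDACTED:{name}]", text)
    return Decision(action, findings, text)
```

In this sketch the strictest action wins, so a prompt containing both an email address and an access key is blocked outright rather than forwarded with a warning.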

A SaaS-native, agentless design reduces deployment friction and operational overhead. By integrating directly with cloud and SaaS platforms, these solutions can scale with AI adoption without requiring invasive endpoint agents or complex infrastructure changes.

Detailed logging and traceability provide evidence of how policies are enforced across AI-enabled workflows. This supports regulatory requirements by demonstrating not just intent, but consistent, repeatable control over sensitive data.
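To make the idea of audit evidence concrete, the following sketch builds one log line per enforcement decision. The field names and the `audit_record` helper are assumptions chosen for illustration, not a format used by any platform named in this guide. The record stores a hash of the inspected content instead of the content itself, so the log does not become a second copy of the sensitive data.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user: str, app: str, policy_id: str, action: str, content: str) -> str:
    """Return one JSON line describing an enforcement decision (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "app": app,
        "policy_id": policy_id,
        "action": action,  # e.g. "block", "redact", "warn"
        # Hash only: lets reviewers correlate events without storing the raw text.
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
    return json.dumps(record, sort_keys=True)
```

Appending such lines to write-once storage would give reviewers a timeline of which policy fired, for whom, and with what outcome.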

Platforms in this category reflect a broader shift in the AI data security market. As AI becomes embedded across everyday SaaS applications, effective protection increasingly depends on unifying governance, discovery, and enforcement into a single operational model rather than relying on isolated point tools.

As organizations move from experimenting with AI to deploying it across production SaaS workflows, the definition of AI data security has expanded. The leading AI data security companies differ significantly in how they approach governance, discovery, and enforcement. The list below highlights five vendors operating in this space, ranked by how comprehensively they address AI-driven data risk across modern enterprise environments.

Brief description

Strac is an AI data security platform designed to secure sensitive data as it moves through AI-enabled SaaS workflows. Rather than treating AI as a standalone risk, Strac focuses on governance, discovery, and real-time enforcement across prompts, uploads, and AI-generated outputs within everyday business tools.

Core use cases

Key strengths

Key weaknesses

Brief description

Securiti is a data governance and privacy automation platform with strong capabilities in data mapping, compliance workflows, and policy management. It is often evaluated by organizations prioritizing regulatory alignment and enterprise-scale governance programs.

Core use cases

Key strengths

Key weaknesses

Brief description

BigID is a well-established data discovery and classification platform widely used to identify sensitive data across large-scale enterprise environments. It plays a foundational role in many DSPM and data intelligence strategies.

Core use cases

Key strengths

Key weaknesses

Brief description

Cyera is a modern DSPM platform focused on identifying and reducing data risk across cloud environments. It emphasizes rapid visibility into sensitive data exposure and misconfigurations.

Core use cases

Key strengths

Key weaknesses

Brief description

Concentric AI specializes in context-aware DSPM, using semantic analysis to prioritize sensitive data risk. It is commonly evaluated by organizations seeking improved signal quality in data risk management.

Core use cases

Key strengths

Key weaknesses

AI data security companies are not interchangeable. The right choice is determined by how effectively a platform can see, govern, and enforce controls across AI-driven data flows that now run through prompts, copilots, SaaS-embedded AI features, and generated outputs. Organizations that evaluate vendors based on real AI usage patterns, rather than legacy categories or feature checklists, are far better positioned to reduce risk while continuing to scale AI adoption and innovation safely.

AI data security companies protect the sensitive data pathways created by AI adoption, not just the AI model itself. In practice, they focus on preventing regulated data, IP, credentials, and customer information from being exposed through AI-driven workflows across SaaS tools and employee usage. What matters is whether protection applies to the real places data moves today (prompts, uploads, context, and AI outputs) rather than only to traditional file or email channels.

AI data security companies differ from traditional DLP vendors because AI introduces runtime, context-dependent exposure that legacy DLP wasn’t designed to control. The key differences typically show up in:

Yes, but only if the platform is designed to cover AI usage where it actually occurs, not just where it is easiest to monitor. Buyers should validate three things in sequence, because gaps usually appear here first.

Yes, many can support GDPR or HIPAA compliance, but the value depends on whether the platform provides enforceable controls and audit evidence, not just policy templates. For GDPR, that often means reducing unauthorized exposure of personal data and improving traceability across SaaS and AI workflows. For HIPAA, it typically means preventing PHI from entering AI tools without appropriate safeguards, and maintaining clear logs of enforcement decisions for compliance reviews.

Deployment time varies widely based on architecture and scope, but most rollouts follow a similar pattern. For organizations prioritizing speed, the practical question is how quickly you can move from “visibility” to “enforced controls” without disrupting users. In general, timelines are driven by:
