AI Model Red Teaming

A structured testing process to identify flaws & vulnerabilities in AI systems, typically performed in a controlled environment by dedicated teams using adversarial methods.

Measures protecting websites and web applications from security threats and vulnerabilities.

Technology that screens and excludes harmful or inappropriate web content.

A weakness or fault in a system, application, or process that malicious actors could exploit to gain unauthorized access, steal data, or disrupt operations. Examples include software bugs, misconfigurations, weak passwords, and design flaws that compromise security.

Weaknesses in a system that could be exploited by threats.

Virtual Private Network - encrypted connection over the internet from a device to a network.

Voice phishing attacks conducted through phone calls.

An isolated section of a public cloud where organizations can run resources in a virtual network.

Information that doesn't follow a predefined data model or organization.

Data repositories that exist outside of an organization's formal management and security controls.

Meeting the requirements of the Sarbanes-Oxley Act for financial reporting and corporate governance.

Cloud-based application delivery model in which applications are accessed over the internet.

The capture and storage of social media communications for compliance and record-keeping.

Psychological manipulation techniques used to deceive people into revealing confidential information.

A framework ensuring service organizations securely manage customer data.

Phishing attacks conducted through SMS text messages.

An authentication method allowing users to access multiple applications with one set of credentials.

Cloud services used by employees without formal IT department approval or oversight.

Hardware or software used within an organization without IT department approval.

Sensitive information that exists outside of an organization's managed systems and security controls.

Data that must be protected from unauthorized access to safeguard privacy or security.

The continuous monitoring and improvement of an organization's overall security status.

Tools that enable organizations to collect security data and automate security operations.

A facility where information security experts monitor, analyze, and protect organizations from cybersecurity threats.

A system providing real-time analysis of security alerts generated by network hardware and applications.

A technique that combines language models with external knowledge retrieval to generate more accurate and contextual responses.
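
The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration only: the word-overlap scoring, the `retrieve` and `build_prompt` helpers, and the sample documents are all assumptions made for the example, and the final call to a language model is left out. Production systems typically use vector embeddings rather than word overlap.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question (hypothetical prompt layout)."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "A VPN is an encrypted connection from a device to a network.",
    "Smishing is phishing conducted through SMS text messages.",
]
prompt = build_prompt("What is smishing?", docs)
```

The resulting `prompt` would then be passed to a language model, which answers using the retrieved context instead of relying only on what it learned in training.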

A group that helps organizations improve security by simulating real-world attacks.

Techniques used to bypass AI systems' built-in restrictions and safeguards.

A type of attack targeting AI systems through manipulated input prompts.

The exploitation of bugs or design flaws to gain elevated access to resources.

Protected Health Information - health data protected under HIPAA regulations.

Any data that could potentially identify a specific individual.

Authorized simulated cyberattack to evaluate system security.

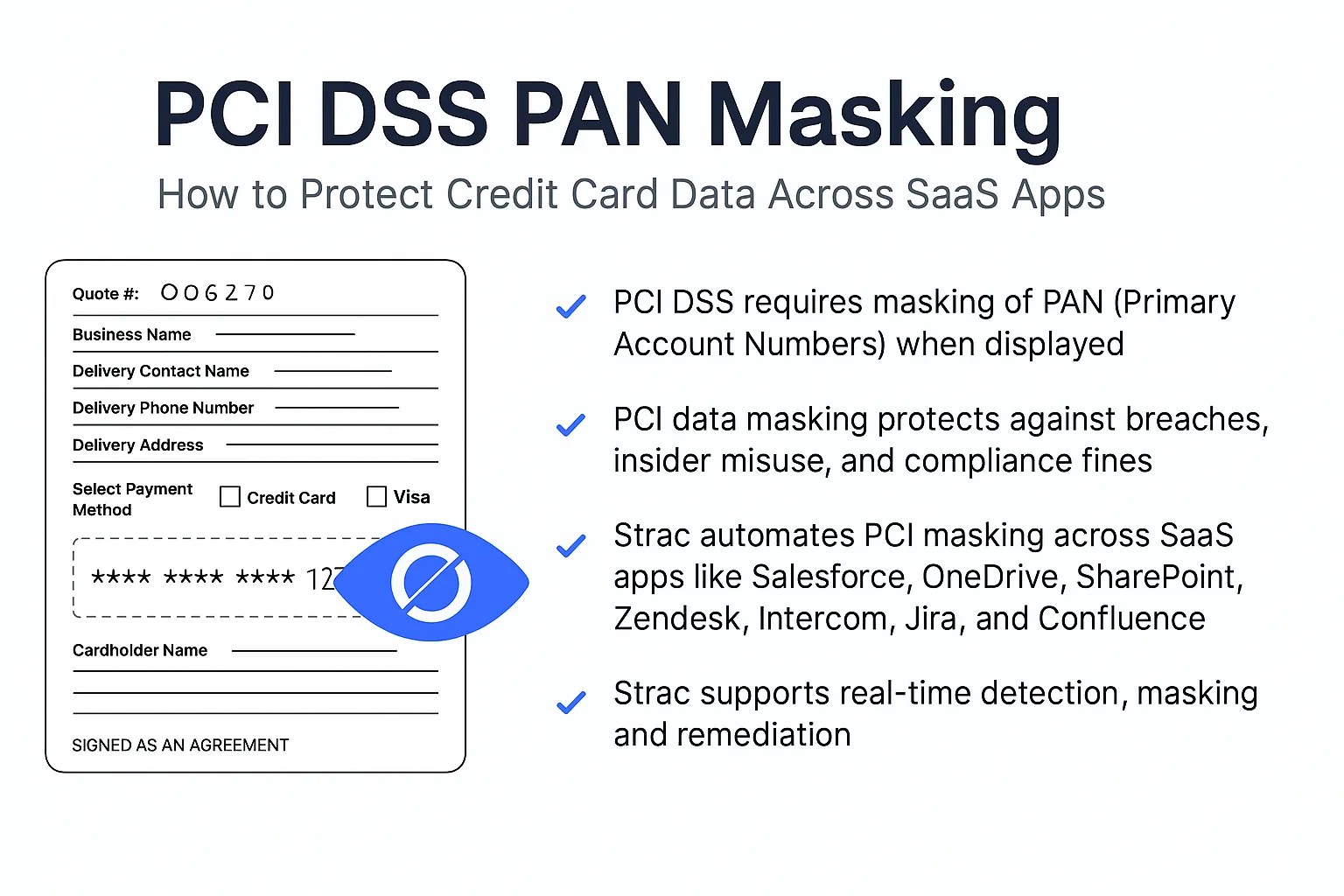

Payment Card Industry Data Security Standard - a security standard for organizations that handle payment card data.

Adherence to the Payment Card Industry Data Security Standard requirements.

Information that has been deliberately made difficult to understand.

New York's requirements for financial institutions' cybersecurity programs.

National Institute of Standards and Technology - U.S. organization that develops cybersecurity standards.

Measures taken to protect the usability and integrity of computer networks and data.

Data Loss Prevention solutions that monitor and protect data moving through network traffic.

The unauthorized extraction or copying of machine learning models.

Software for managing and securing mobile devices in an enterprise environment.

A globally accessible knowledge base of adversary tactics and techniques.

Security vulnerabilities resulting from incorrect system or application settings.

A cloud security platform providing threat protection for cloud workloads.

A tool that helps organizations discover and manage external digital assets.

A security system requiring multiple forms of verification to grant access.
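
One common second factor is a time-based one-time password (TOTP), as specified in RFC 6238. The sketch below shows how such a code can be derived from a shared secret and the current time. It is a minimal illustration, not a complete multi-factor system: the function name `totp` and its parameters are chosen for this example, and a real deployment would also need secure secret storage, clock-drift tolerance, and rate limiting.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a TOTP code (RFC 6238, HMAC-SHA-1) for the given time."""
    # Number of time steps elapsed since the Unix epoch.
    counter = unix_time // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare a submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)
```

The server and the user's authenticator app each compute the code independently from the same secret, so the code proves possession of the enrolled device in addition to knowledge of the password.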

Information that has been modified to hide sensitive elements while maintaining a similar structure.
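
As a small illustration of masking that preserves structure, the sketch below hides all but the last few digits of a card-style number while leaving separators in place. The function name `mask_card_number` and the choice of `X` as the masking character are assumptions made for this example, not a standard.

```python
def mask_card_number(number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with 'X', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in number)
    to_mask = max(total_digits - visible, 0)
    masked = []
    for ch in number:
        if ch.isdigit() and to_mask > 0:
            masked.append("X")
            to_mask -= 1
        else:
            # Separators and the trailing visible digits pass through unchanged.
            masked.append(ch)
    return "".join(masked)


print(mask_card_number("4111-1111-1111-1234"))  # XXXX-XXXX-XXXX-1234
```

Because the length and separator positions are unchanged, downstream systems that validate the format can still process the masked value.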

A cyberattack where attackers secretly intercept and relay communications between two parties.

Malicious software created to damage, disrupt, or gain unauthorized access to computer systems.

Files attached to emails containing harmful code or malware.

Technology enabling systems to learn and improve from experience without explicit programming.

Security principle of giving users only the minimum access rights needed for their work.

Advanced AI models trained on vast amounts of text data to understand and generate human-like language.

Malicious software that records keystrokes to capture sensitive information.

Adherence to requirements set by laws, regulations, and industry standards for IT systems.

Security risks posed by individuals with legitimate access to an organization's systems.

Documented guidelines for protecting an organization's information assets.

Security solutions focused on detecting and responding to identity-based threats.

Framework of policies and technologies managing digital identities and access rights.

The human element of cybersecurity where employees act as a defense against security threats.

A form of encryption allowing computations on encrypted data without decrypting it.

Health Information Technology for Economic and Clinical Health Act - legislation that strengthens HIPAA enforcement.

Meeting the requirements set forth by HIPAA for protecting healthcare information.

Health Insurance Portability and Accountability Act - U.S. legislation that protects medical information privacy.

Common issues in AI systems where they generate false information, show inconsistent behavior, or display unfair prejudices.

Security measures and considerations specific to Google's Bard AI language model.

AI systems capable of creating new content, including text, images, code, or other data types.

The General Data Protection Regulation - EU's comprehensive data protection and privacy regulation.

The process of adjusting AI models to boost their performance for specific tasks or domains.

An incorrect identification of a threat or violation when none actually exists.

The unauthorized transmission of data from a computer or network to an external location.

A DLP technique that identifies sensitive data by matching it exactly against known values.
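
A minimal sketch of the idea, assuming the known sensitive values are stored as SHA-256 fingerprints so the scanner never holds them in plain text. The helper names (`fingerprint`, `build_index`, `find_exact_matches`), the simple tokenizer, and the sample values are illustrative assumptions, not the behavior of any specific DLP product.

```python
import hashlib
import re


def fingerprint(value: str) -> str:
    """Return the SHA-256 hex digest of a trimmed, lowercased value."""
    normalized = value.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def build_index(known_values):
    """Fingerprint every known sensitive value once, up front."""
    return {fingerprint(value) for value in known_values}


def find_exact_matches(text, index):
    """Return tokens in `text` whose fingerprint appears in the index."""
    matches = []
    for token in re.findall(r"[\w@.\-]+", text):
        # Drop sentence punctuation that clings to the token.
        candidate = token.strip(".-")
        if candidate and fingerprint(candidate) in index:
            matches.append(candidate)
    return matches


index = build_index(["123-45-6789", "alice@example.com"])
hits = find_exact_matches(
    "Please send to Alice@Example.com. SSN 123-45-6789 and 987-65-4321", index
)
```

Only values present in the index are flagged, so a lookalike number such as `987-65-4321` is ignored. This is why exact matching tends to produce fewer false positives than pattern-based detection.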

An independent supervisory authority responsible for monitoring EU institutions' processing of personal data.

An independent European body that maintains consistent application of data protection rules.

A framework for regulating transatlantic exchanges of personal data for commercial purposes.

Comprehensive protection of an organization's network, data, and assets from security threats.

Protection of network endpoints against cybersecurity threats.

Security technology that monitors and responds to suspicious activities on endpoint devices.

DLP solutions that protect data on end-user devices like laptops and mobile devices.

The tracking and analysis of user interactions with applications and systems.

The process of converting information into a coded form to prevent unauthorized access.

Information that has been transformed into a scrambled format that can only be read with the right decryption key.

The creation of email messages with a forged sender address to deceive recipients.

Comprehensive measures to protect email systems from unauthorized access, loss, or compromise.

Security measures designed to defend against email-based threats and protect sensitive information.

Cyberattacks in which criminals pose as trusted senders to deceive recipients.

The automated processing of emails to remove spam and malicious content before delivery.

The process of encoding email messages to protect their content from unauthorized access.

Security protocols that verify the legitimacy of email senders and prevent email spoofing.

Rules and configurations that define how Data Loss Prevention solutions identify and protect sensitive information.

The process of collecting, preserving, and analyzing digital evidence for investigative purposes.

A cybersecurity strategy that employs multiple layers of security controls to protect data.

Distributed Denial of Service - a cyberattack that floods systems with traffic to make them unavailable to legitimate users.

The unauthorized copying, transfer, or retrieval of sensitive data by malicious actors.

An individual whose personal data is being collected, held, or processed.

A repository for persistently storing and managing collections of data, including databases, data lakes, and file systems.

The uncontrolled spread of data across multiple locations, devices, and cloud services.

A framework for continuously monitoring and improving an organization's data security status.

An integrated solution that provides comprehensive protection for an organization's data assets.

Protective measures applied to prevent unauthorized access to databases, websites, and computers.